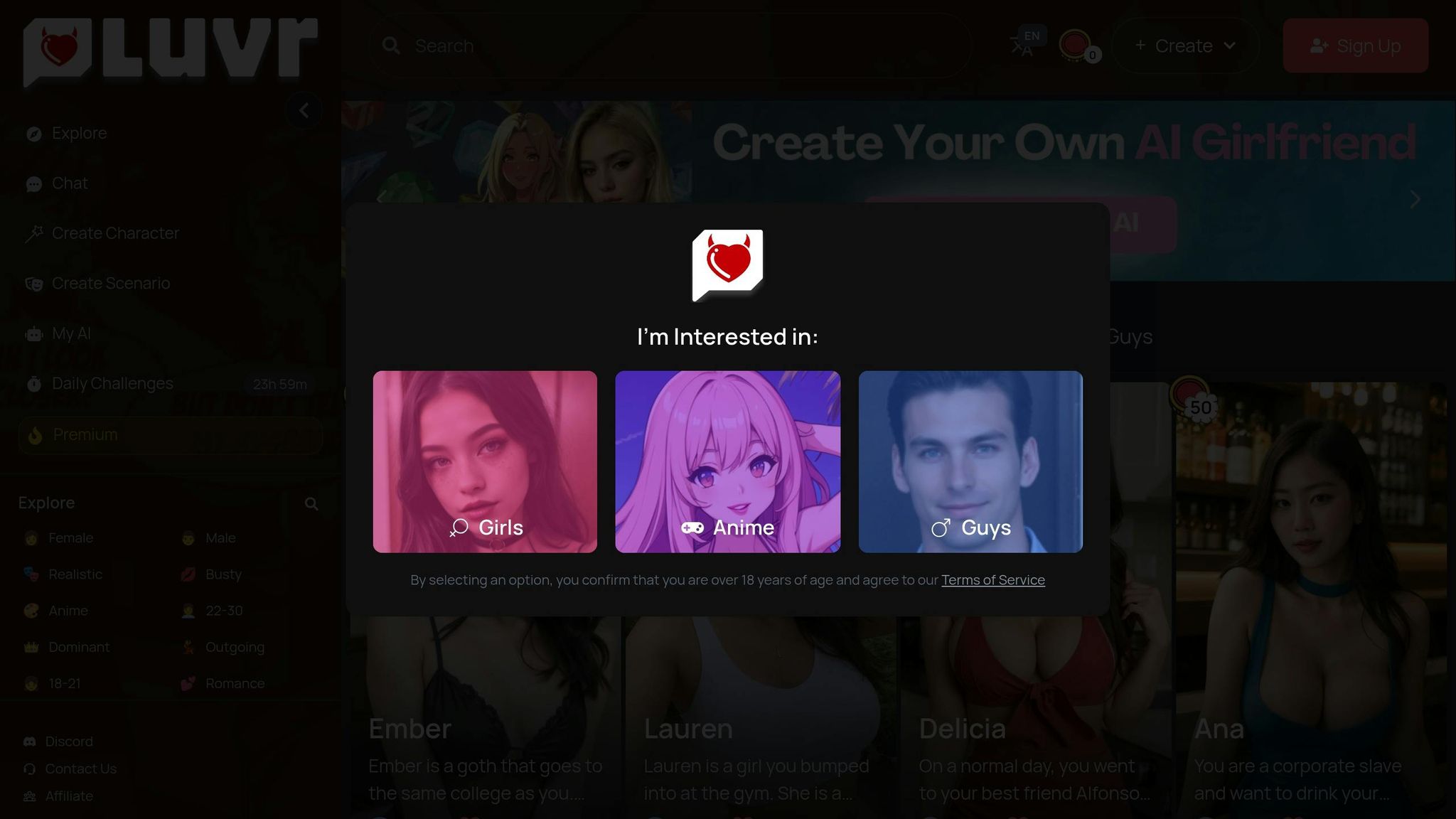

Create Your Own AI Girlfriend 😈

Chat with AI Luvr's today or make your own! Receive images, audio messages, and much more! 🔥

4.5 stars

AI Intimacy and Global Regulations

AI companions are reshaping human relationships, offering emotional support and connection like never before. But their rapid rise brings complex challenges in global regulation.

Value Summary

- AI Companions: Platforms like Luvr AI let users create personalized AI relationships, including AI girlfriends, with features like text, image, and audio messaging.

- Market Growth: The Emotion AI market is set to grow from $3 billion in 2024 to $7 billion by 2029.

- Regulatory Challenges: The EU enforces strict rules like banning emotion-detecting AI in workplaces, while the U.S. takes a more flexible approach.

- Compliance Costs: Non-compliance with EU laws can result in fines as high as €35 million or 7% of global turnover.

Quick Overview

| Region | Key Regulations | Penalties |
|---|---|---|
| European Union | AI Act bans emotion detection in workplaces; GDPR mandates strict data protection | Fines up to €35M or 7% of global revenue |
| United States | No federal law; state-level acts like California’s AI Transparency Act | $5,000 daily fines for non-compliance |

This article explores how platforms like Luvr AI navigate these evolving regulations to balance innovation with user safety.

1. Luvr AI Platform Standards

Luvr AI operates with strict self-regulation and compliance measures to keep pace with the changing landscape of AI intimacy regulations. Based in San Francisco, the platform follows local legal standards, with its most recent Terms and Conditions update published on January 15, 2024.

To ensure a safe environment, Luvr AI requires users to undergo thorough age verification. All users must be at least 18 years old, or meet the legal age requirement in their region. Providing false age information leads to immediate account termination.

The platform employs a multi-layered moderation system that combines advanced filtering technology with human oversight. This system actively scans user interactions and AI-generated content for potential violations. If flagged, the content undergoes manual review to ensure compliance with platform policies [11, 13].
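To make the two-stage idea concrete, here is a minimal sketch of an automated filter that holds flagged messages for manual review. It is not Luvr AI's actual system: the `ModerationQueue` class, the `BLOCKED_TERMS` word list, and the status strings are all hypothetical, and a production filter would rely on trained classifiers rather than keyword matching.

```python
from dataclasses import dataclass, field

# Hypothetical blocklist, for illustration only.
BLOCKED_TERMS = {"threat", "dox"}


@dataclass
class ModerationQueue:
    """Automated first pass plus a queue for human reviewers."""

    pending_review: list[str] = field(default_factory=list)

    def submit(self, message: str) -> str:
        """Return 'allowed', or 'held' if the automated filter flags the message."""
        words = set(message.lower().split())
        if words & BLOCKED_TERMS:
            self.pending_review.append(message)
            return "held"
        return "allowed"

    def review(self, message: str, violates_policy: bool) -> str:
        """Record a human reviewer's decision on a held message."""
        self.pending_review.remove(message)
        return "removed" if violates_policy else "restored"
```

A held message stays in `pending_review` until a reviewer calls `review`, so the automated layer never removes content on its own.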

"We are dedicated to maintaining a safe, respectful, and law-abiding online environment for all users." – Luvr AI

Luvr AI enforces a strict Blocked Content Policy, prohibiting activities such as illegal conduct, hate speech, discrimination, threats, violence, exploitation of minors, privacy violations, intellectual property theft, and impersonation. Users are held accountable for their interactions, ensuring all engagement aligns with legal and ethical standards.

Recognizing the more stringent regulations in the European Union, Luvr AI includes specific clauses in its Terms and Conditions to ensure EU users benefit from their local legal protections. By adapting its policies to meet regional requirements, the platform demonstrates its ability to navigate complex regulatory environments.

Enforcement measures include content removal, account suspension, and, in severe cases, legal action. Users can report violations through the contact section, and repeat offenders may face permanent account termination for breaching community guidelines.

On the topic of privacy, Luvr AI requires all users to agree to a privacy policy designed to meet the standards of regulations like the EU's GDPR. The policy is intended to keep user interactions confidential and secure [10, 11]. With this framework in place, Luvr AI positions itself as a forward-thinking example in the rapidly evolving world of AI regulation.

2. European Union AI Rules

The European Union has laid out a strict regulatory framework governing AI's emotional interactions through two major laws: the EU AI Act and the General Data Protection Regulation (GDPR). These laws demand rigorous compliance, creating a challenging landscape for platforms like Luvr AI that operate in European markets. The AI Act sets binding, risk-based rules on how AI may be used, while the GDPR governs how personal data is handled, so platforms have to satisfy both sets of obligations at once.

Prohibited AI Systems

From February 2, 2025, Article 5(1)(f) of the EU AI Act prohibits AI systems that infer emotions in workplaces and educational settings, unless they are used for medical or safety purposes. The Act also bans systems that use subliminal or manipulative techniques to materially distort behavior, particularly when those techniques undermine informed decision-making or cause harm. The term "workplace" is broadly interpreted to include both physical and virtual environments where employees perform tasks, as well as spaces involving recruitment processes.

High-Risk AI Systems

For AI systems that are not outright banned, the AI Act lists emotion recognition among its high-risk use cases. These systems must adhere to strict oversight requirements, including effective human supervision during their operation. The GDPR complements these measures by mandating robust data protection practices for such platforms. For companies like Luvr AI, meeting these standards is essential to remain compliant in the European market.

GDPR Requirements for AI Intimacy Platforms

Under the GDPR, platforms handling personal data for emotional AI must establish a solid legal basis, often through explicit user consent. Key requirements include:

- Collecting only the data that is absolutely necessary

- Using data strictly for the purposes communicated to users

- Providing clear and transparent information about data processing practices
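The first two requirements above, data minimization and purpose limitation, can be sketched as a simple gate in front of any processing step. This is an illustrative sketch only: the `ALLOWED_FIELDS` mapping, the purpose names, and the `minimize` function are hypothetical, and consent is assumed to be the legal basis even though the GDPR allows others.

```python
# Hypothetical mapping from each processing purpose to the fields it needs.
ALLOWED_FIELDS = {
    "chat": {"user_id", "message"},
    "billing": {"user_id", "payment_token"},
}


def minimize(record: dict, purpose: str, consented: set[str]) -> dict:
    """Return only the fields needed for a purpose the user has consented to."""
    if purpose not in consented:
        # Purpose limitation: refuse to process for an uncommunicated purpose.
        raise PermissionError(f"no consent recorded for purpose: {purpose}")
    allowed = ALLOWED_FIELDS[purpose]
    # Data minimization: drop every field the purpose does not require.
    return {key: value for key, value in record.items() if key in allowed}
```

For example, calling `minimize` on a record that also contains a `location` field with the purpose `"chat"` returns the record without `location`, and asking for `"billing"` without recorded consent raises `PermissionError`.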

Financial Penalties and Market Impact

Failing to comply with the EU AI Act can result in severe penalties: violations of its prohibitions carry fines of up to €35 million or 7% of global annual turnover, whichever is higher. Similarly, the most serious GDPR violations carry penalties of up to €20 million or 4% of global annual turnover, whichever is higher.
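A quick calculation shows how these caps scale with company size. The sketch below assumes the "whichever is higher" rule that both regulations apply to their top fine tiers. The function name and the turnover figures are hypothetical, and amounts are whole euros so the arithmetic stays exact.

```python
def max_fine_eur(turnover_eur: int, fixed_cap_eur: int, turnover_percent: int) -> int:
    """Upper bound on a fine: the fixed cap or a share of turnover, whichever is higher."""
    return max(fixed_cap_eur, turnover_eur * turnover_percent // 100)


# Fixed cap in euros and percentage of global annual turnover, from the text above.
AI_ACT_TOP_TIER = (35_000_000, 7)
GDPR_TOP_TIER = (20_000_000, 4)

# Hypothetical companies with 100 million and 1 billion euros in turnover.
print(max_fine_eur(100_000_000, *AI_ACT_TOP_TIER))    # 35000000
print(max_fine_eur(1_000_000_000, *AI_ACT_TOP_TIER))  # 70000000
print(max_fine_eur(1_000_000_000, *GDPR_TOP_TIER))    # 40000000
```

For the smaller company the fixed cap dominates, while for the larger one the turnover percentage does. These are maximums, and actual fines depend on the circumstances of each case.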

Enforcement examples highlight the gravity of these rules. In 2019, the French Data Protection Authority (CNIL) fined Google €50 million for failing to meet GDPR standards on transparency and consent. Likewise, in 2022 the Italian Data Protection Authority fined Clearview AI €20 million for unauthorized personal data collection that violated GDPR principles like data minimization and transparency. With the Emotion AI market expected to grow from $3 billion in 2024 to $7 billion by 2029, compliance is becoming increasingly critical for platforms aiming to thrive in Europe.
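To put the market projection in perspective, the implied compound annual growth rate can be worked out from the two endpoints. This is a back-of-the-envelope sketch: the `cagr` helper is hypothetical, and it assumes smooth compounding over the five years from 2024 to 2029, which the projection itself does not state.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over a number of years."""
    return (end_value / start_value) ** (1 / years) - 1


# $3 billion in 2024 growing to $7 billion by 2029.
rate = cagr(3.0, 7.0, 2029 - 2024)
print(f"{rate:.1%}")  # about 18.5%
```

In other words, the projection implies the market growing by a bit under a fifth each year.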

Compliance Challenges

Navigating the combined demands of the EU AI Act and GDPR poses significant challenges for AI intimacy platforms, especially given the sensitive and extensive nature of the data they handle. To comply, platforms must conduct Data Protection Impact Assessments (DPIAs) for high-risk applications and implement strong technical and organizational safeguards. These measures help uphold user rights such as access to data, rectification, erasure, and objection to automated decision-making.

Maintaining compliance also requires ongoing efforts, including regular risk assessments, staff training, and staying updated on best practices for developing and deploying emotional AI systems. In a fast-evolving global market, platforms must adopt flexible strategies to keep pace with regulatory changes and ensure they meet these stringent requirements.

Benefits and Drawbacks

The global landscape of AI intimacy regulations brings a mix of opportunities and challenges for platforms like Luvr AI.

Advantages of Stricter Regulation

Building User Trust and Ensuring Safety

Tighter regulations can enhance transparency, safeguard user data, and minimize bias. For Luvr AI, adhering to these standards can strengthen user trust by demonstrating accountability and transparency, which encourages adoption and long-term loyalty.

Gaining a Competitive Edge Through Compliance

Successfully navigating strict regulatory requirements can position companies as trustworthy leaders in the industry. According to the National Association of Corporate Directors’ 2025 Trends and Priorities Survey, 30% of corporate directors expect artificial intelligence to be a top business priority by 2025. This growing focus on AI governance at the executive level opens doors for enterprise partnerships and institutional investments, giving compliant platforms an edge.

However, these benefits are offset by the operational hurdles that overly stringent regulations can create.

Drawbacks of Over-Regulation

Stifling Innovation and Raising Market Barriers

Excessive regulations can drive up operational costs, slow down product development, and limit feature offerings. The financial burden of compliance can be particularly challenging for startups, as Peter Guagenti, CEO of Integrail, explains:

"The EU AI Act, like much of the proposed regulation, is overly broad and burdensome to the startups that represent the biggest opportunity for positive economic and social benefits from AI."

– Peter Guagenti

For emerging platforms in the AI intimacy space, these costs can hinder creativity and favor larger, more established companies.

Operational Challenges

Finding skilled personnel to meet regulatory demands adds another layer of difficulty. A survey by Swimlane and Sapio Research found that 44% of cybersecurity decision-makers struggle to find and retain AI expertise, and only 40% believe their organizations have invested enough to comply with current cybersecurity regulations. This talent shortage complicates efforts to meet compliance standards, particularly for smaller companies.

Benefits of Flexible Regulatory Approaches

Encouraging Innovation and Quick Adaptation

Less restrictive regulatory environments create room for innovation by minimizing bureaucratic obstacles. For instance, the U.S., which currently leans on voluntary guidelines rather than comprehensive legislation, gives platforms the flexibility to evolve and enhance their services more quickly.

"Clear rules help businesses operate with confidence, but if regulations become too restrictive, they might push great, worthy research elsewhere."

– Sarah Choudhary, CEO of ICE Innovations

This flexibility allows developers to adapt rapidly and continuously improve user experiences. However, even with flexible approaches, the challenge of navigating international regulatory differences remains.

Challenges of Inconsistent Global Standards

Complexities of Cross-Border Operations

Operating internationally becomes increasingly complicated due to fragmented global standards. With legislatures in many countries proposing or passing AI laws, platforms must manage a variety of definitions, legal frameworks, and compliance obligations. Often, companies adopt the strictest standards to ensure compliance across all regions. Additionally, overlapping regulations in areas like intellectual property, antitrust, and data protection further complicate operations.
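The "adopt the strictest standard" strategy can be pictured as merging per-region rules into a single baseline. The sketch below is purely illustrative: the region names, rule keys, and every value in `REGION_RULES` are invented for the example and are not actual legal requirements.

```python
# Hypothetical per-region requirements, invented for illustration only.
REGION_RULES = {
    "EU": {"min_age": 18, "max_retention_days": 30, "ai_disclosure": True},
    "US-CA": {"min_age": 18, "max_retention_days": 90, "ai_disclosure": True},
    "OTHER": {"min_age": 16, "max_retention_days": 365, "ai_disclosure": False},
}


def strictest(rules: dict[str, dict]) -> dict:
    """Merge regional rules into one baseline that satisfies every region."""
    regions = list(rules.values())
    return {
        # The highest minimum age satisfies every lower one.
        "min_age": max(r["min_age"] for r in regions),
        # The shortest retention window satisfies every longer one.
        "max_retention_days": min(r["max_retention_days"] for r in regions),
        # If any region requires disclosure, disclose everywhere.
        "ai_disclosure": any(r["ai_disclosure"] for r in regions),
    }


baseline = strictest(REGION_RULES)
```

The trade-off is the one the text describes: a single baseline is simpler to operate, but it applies the most demanding region's constraints to users everywhere.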

Strategic Adjustments for Global Compliance

To succeed, international businesses must conduct thorough risk assessments aligned with the highest regulatory benchmarks and develop adaptable governance frameworks to meet diverse requirements. While this approach increases costs and complexity, it also pushes platforms toward creating more robust and universally compliant systems. For companies like Luvr AI, balancing innovation with responsible development while staying agile in the face of evolving regulations will be key to thriving in this complex environment.

Conclusion

The evolving regulatory environment for AI intimacy platforms reveals a clear divide between rigid oversight and more adaptable market practices. The European Union's AI Act stands out as one of the most comprehensive AI regulations globally, introducing a risk-based framework with its steepest penalties reserved for prohibited AI practices. On the other hand, the United States takes a more fragmented approach, blending federal guidelines, state-level laws, and industry-specific rules. This contrast highlights the fundamental difference between the U.S.'s market-driven flexibility and the EU's stricter regulatory stance.

For platforms like Luvr AI, these differences create both opportunities and challenges. For instance, while the EU bans emotion-detecting AI in workplace settings (with a few safety-related exceptions), the U.S. imposes no such sweeping restrictions. However, individual states are stepping in to address regulatory gaps. A prime example is California's AI Transparency Act, which will take effect in January 2026. This law mandates that AI systems with over 1 million monthly users in California implement detection tools and disclose content origins. Non-compliance could result in $5,000 daily fines. These contrasting regulatory strategies reflect broader implications for global AI governance.

The economic potential of emotional AI cannot be ignored, with its market projected to reach $13.8 billion by 2032. Additionally, AI-related legislation is rapidly expanding. According to Stanford University's 2023 AI Index, the number of AI-related bills passed into law each year across the 127 countries it tracks grew from one in 2016 to 37 in 2022. This rapid legislative growth indicates that platforms operating internationally may need to align with the strictest global regulations to maintain market access.

"By developing a strong regulatory framework based on human rights and fundamental values, the EU can develop an AI ecosystem that benefits everyone." – European Commission

As the balance between innovation and protection continues to evolve, the future of AI-driven emotional interactions will likely be shaped by this regulatory race. Platforms that prioritize strong governance and collaborate with policymakers will be better equipped to navigate these complexities. Companies that succeed in blending technological progress with ethical responsibility will play a central role in shaping the industry's trajectory.

FAQs

How does Luvr AI comply with different regional regulations, like those in the EU and the US, regarding AI emotional interaction?

Luvr AI aligns its operations with local regulations by customizing its practices to meet the legal standards of each region. For instance, in the European Union, where the AI Act enforces strict guidelines on emotion recognition technologies, the platform likely prioritizes measures like stronger data privacy safeguards and comprehensive risk evaluations. Meanwhile, in the United States, where there’s no overarching federal AI law, compliance is guided by sector-specific rules and privacy frameworks that stress transparency and secure data management.

This tailored approach allows Luvr AI to navigate the distinct regulatory environments of different markets, ensuring its operations remain both responsible and compliant.

What are the risks and benefits of using AI companions under current global regulations?

Using AI companions within the framework of today's global regulations comes with both upsides and challenges.

On the positive side, regulations often enhance safety and transparency. Many rules require AI systems to clearly disclose their capabilities, helping to protect users from misleading interactions. In areas like mental health, ethical guidelines are designed to promote responsible use and prioritize user well-being.

But there are challenges too. Overly strict regulations can stifle innovation, while weak or poorly crafted rules might leave users exposed to risks like privacy violations, security breaches, or even emotional manipulation. Misuse of AI, such as inappropriate interactions or exploitation, further underscores the need for continuous updates and improvements to regulatory frameworks. Finding the right balance between encouraging progress and protecting users remains a critical goal.

How do regulatory differences between the EU and the US shape the future of AI intimacy technologies?

The differing regulatory strategies of the EU and the US are steering the growth of AI intimacy technologies in noticeably different directions. The EU's AI Act, with its emphasis on safety, transparency, and accountability, prioritizes ethical standards and user trust. However, this rigorous approach can also slow down the pace of innovation. On the other hand, the US adopts a more flexible and less centralized regulatory framework, which encourages faster innovation but may introduce concerns about safety and oversight.

For platforms like Luvr AI, this creates a complex global environment. In the US, looser regulations might allow for quicker rollout of new features. Meanwhile, the EU's stricter policies could lead to designs that are safer and more ethically sound. These contrasting approaches are likely to shape the future of AI intimacy technologies and their global adoption.