Ethical Issues Around Consent in AI Roleplay

AI roleplay raises complex consent challenges that differ from human interactions. Here's what you need to know:

What is AI Roleplay Consent?

It's about users knowing they're interacting with AI, understanding how their data is used, and being aware of any commercial motives behind the interaction.

Key Issues:

- Emotional attachment to AI companions makes consent tricky.

- Standard user agreements fail to address the evolving nature of AI relationships.

- Privacy concerns arise from AI's large-scale data collection.

- Users often misunderstand how their inputs shape AI responses.

Why It Matters:

- 88% of people avoid companies that disrespect privacy.

- Emotional bonds with AI can blur the lines of informed consent.

- Sudden policy changes (e.g., content restrictions) erode user trust.

Solutions:

- Implement smart consent systems with real-time monitoring and dynamic permissions.

- Ensure data protection through encryption and regular audits.

- Provide clear communication about AI limitations to manage expectations.

- Adopt safety measures like crisis response protocols and transparent guidelines.

Quick Comparison: AI vs. Human Consent

| Aspect | AI Roleplay | Human Interaction |
|---|---|---|
| Availability | 24/7 access | Limited by schedules |
| Emotional Processing | Programmed responses | Genuine understanding |
| Consent Withdrawal | System-level controls | Social cues and communication |
| Data Collection | Large-scale storage | Limited personal information |

Takeaway: Platforms must prioritize user safety, privacy, and transparency to build trust in AI roleplay. Without strong safeguards, users remain vulnerable to ethical and emotional risks.

Key Consent Issues in AI Roleplay

AI roleplay calls for rethinking consent frameworks, because it works differently from human interaction.

How AI Consent Differs from Human Consent

Consent in AI systems comes with challenges that don’t exist in human relationships. People can negotiate boundaries in real time and adapt to each other’s needs, whereas AI operates within fixed programming and platform rules that leave little room for that flexibility. This rigidity creates a gap in how consent is understood and managed.

| Aspect | Traditional Consent | AI Roleplay Consent |
|---|---|---|
| Negotiation | Dynamic, two-way communication | Fixed responses |
| Boundary Setting | Mutual understanding | System limitations |
| Data Collection | Limited personal information | Large-scale data storage |
| Availability | Time-restricted | 24/7 accessibility |
| Emotional Impact | Natural relationship evolution | Potential for rapid attachment |

The emotional aspect adds another layer of complexity to consent in AI roleplay.

User Attachment to AI Characters

One of the most striking challenges is the emotional attachment users develop with AI companions. Research shows that around 75% of AI companion users are men.

"Well I know, that's a fact, that's what we're doing. We see it and we measure that. We see how people start to feel less lonely talking to their AI friends."

This emotional connection often goes beyond what traditional consent agreements are prepared to handle, highlighting a need for more nuanced frameworks.

Limits of Standard User Agreements

As AI roleplay continues to grow, standard user agreements fall short in addressing its unique challenges. Here’s why:

- Data Protection Challenges: While some AI providers limit data usage, others reserve broad rights, creating uncertainty for users.

- Emotional Investment Considerations: The emotional bonds users form with AI companions can cloud their understanding of service terms, making informed consent more difficult.

- Ongoing Consent Management: Traditional one-time agreements don’t account for the evolving nature of AI relationships, leaving gaps in consent over time.
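To make the ongoing-consent point concrete, consent can be stored as a record tied to a specific policy version and a maximum age, so the platform asks again whenever the terms change or the agreement goes stale. This is a minimal sketch under stated assumptions: `CURRENT_POLICY_VERSION`, the 180-day window, and the `ConsentRecord` fields are hypothetical choices for illustration, not requirements drawn from any law or platform.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional

# Hypothetical values chosen for illustration only.
CURRENT_POLICY_VERSION = "2025-01"
MAX_CONSENT_AGE = timedelta(days=180)


@dataclass(frozen=True)
class ConsentRecord:
    user_id: str
    policy_version: str   # version of the terms the user agreed to
    granted_at: datetime  # when consent was last confirmed (timezone-aware)


def needs_reconsent(record: Optional[ConsentRecord], now: datetime) -> bool:
    """Return True if the user should be asked to confirm consent again."""
    if record is None:
        return True  # nothing on file yet
    if record.policy_version != CURRENT_POLICY_VERSION:
        return True  # the terms changed after the user agreed
    return now - record.granted_at > MAX_CONSENT_AGE  # the agreement is stale


# Usage: consent given three months ago under the current terms is still valid.
now = datetime(2025, 6, 1, tzinfo=timezone.utc)
record = ConsentRecord("user-1", "2025-01", datetime(2025, 3, 1, tzinfo=timezone.utc))
print(needs_reconsent(record, now))  # False
```

Re-asking on a version change covers sudden policy shifts, while the age limit covers relationships that keep evolving long after the original sign-up.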

With nearly 50% of American adults reporting feelings of loneliness, striking a balance between safeguarding user data and addressing the emotional nuances of AI companionship is becoming increasingly critical.

Barriers to Consent Management

Managing consent effectively in AI roleplay is no small feat. While ethical concerns have already been highlighted, the practical challenges of consent management demand attention. Addressing these hurdles is essential to safeguard users while ensuring AI interactions remain engaging and meaningful.

Managing Consent During Live Sessions

Handling consent in real-time AI roleplay sessions is both a technical and an ethical balancing act. One major issue is that users often misunderstand how their inputs influence AI responses. Demand raises the stakes further: research indicates that 72% of users want more practice with difficult conversations, a figure that climbs to 87% among Gen Z users. Together, these points highlight the need for better tools and clearer communication during these interactions.

| Consent Challenge | Impact | Potential Solution |
|---|---|---|
| Cultural differences | Varied understanding of autonomy | Context-specific guidelines |
| Technical limitations | Delayed response to consent changes | Smart intervention systems |

These obstacles underscore the importance of creating systems that can adapt quickly to user needs while respecting privacy and autonomy. Without such measures, real-time consent management will remain a sticking point.
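One way a smart intervention system can close the "delayed response" gap is to check the user's consent state before every generated turn instead of once per session. The sketch below is a minimal illustration only: the stop phrases, the `RoleplaySession` class, and the `generate_reply` callback (a stand-in for the actual model call) are all hypothetical.

```python
from typing import Callable

# Hypothetical phrases that pause the roleplay immediately. A real product
# would also offer a visible button or setting, not only text commands.
STOP_PHRASES = {"/stop", "stop roleplay", "i want to stop"}
PAUSED_MESSAGE = "Roleplay paused. You can resume or change your settings at any time."


class RoleplaySession:
    def __init__(self, generate_reply: Callable[[str], str]) -> None:
        self._generate_reply = generate_reply  # stand-in for the model call
        self.active = True

    def withdraw_consent(self) -> None:
        """Can also be wired to a UI control; applies before the next turn."""
        self.active = False

    def resume(self) -> None:
        """Only an explicit user action should call this."""
        self.active = True

    def handle_message(self, text: str) -> str:
        # The consent check runs before any text is generated.
        if text.strip().lower() in STOP_PHRASES:
            self.withdraw_consent()
        if not self.active:
            return PAUSED_MESSAGE
        return self._generate_reply(text)


# Usage with a dummy reply function in place of a real model.
session = RoleplaySession(lambda text: f"(character replies to: {text})")
print(session.handle_message("Hi there"))  # (character replies to: Hi there)
print(session.handle_message("/stop"))     # prints PAUSED_MESSAGE
```

Exact phrase matching is deliberately simple and will miss paraphrases; the point is the ordering, with the consent check running before any reply is produced.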

Protecting User Data and Privacy

Privacy is a top concern for users engaging with AI roleplay platforms. A recent survey found that 67% of users identified hacking as their main worry when using intelligent personal assistants. This anxiety extends to AI roleplay, where sensitive, personal exchanges create a unique set of vulnerabilities.

"The more fulsome you are in your disclosures and the more honest you are, the less risk you have." - Noel Hillman, Former U.S. District Court Judge

The challenge here is finding a middle ground: ensuring enough data is available to personalize interactions without compromising user privacy. One solution is to anonymize personal information while keeping enough context to maintain meaningful AI responses. This approach helps reduce risks while still offering tailored experiences.
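A minimal sketch of that approach, assuming chat messages are plain text and that email addresses and phone numbers are the identifiers to mask. Each identifier becomes a consistent placeholder, so the AI can still tell that the same contact came up twice without the raw value being stored. Strictly speaking this is pseudonymization rather than anonymization: the returned mapping can reverse it, so it has to live apart from the chat logs or be discarded. The regular expressions are illustrative and far from complete.

```python
import re

# Illustrative patterns only; they will miss many real-world formats.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def pseudonymize(text: str) -> tuple[str, dict[str, str]]:
    """Replace emails and phone numbers with stable placeholder tokens.

    Returns the masked text plus a token -> original mapping. The mapping
    makes the masking reversible, so store it apart from chat logs or drop it.
    """
    token_for: dict[str, str] = {}  # original value -> token
    mapping: dict[str, str] = {}    # token -> original value
    counters: dict[str, int] = {}   # per-label numbering

    def make_replacer(label: str):
        def replace(match: re.Match) -> str:
            value = match.group(0)
            if value not in token_for:
                counters[label] = counters.get(label, 0) + 1
                token = f"[{label}_{counters[label]}]"
                token_for[value] = token
                mapping[token] = value
            # The same value always maps to the same token, preserving context.
            return token_for[value]
        return replace

    masked = EMAIL_RE.sub(make_replacer("EMAIL"), text)
    masked = PHONE_RE.sub(make_replacer("PHONE"), masked)
    return masked, mapping


masked, mapping = pseudonymize(
    "Email me at jane.doe@example.com or call +1 555-123-4567. Again: jane.doe@example.com"
)
print(masked)  # Email me at [EMAIL_1] or call [PHONE_1]. Again: [EMAIL_1]
```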

But privacy alone isn’t enough - users also need clarity about how AI systems operate.

Making AI Decisions Clear to Users

A lack of transparency in AI decision-making is another major barrier. Many users struggle to grasp how their interactions influence AI behavior, which can lead to mismatched expectations. As Dr. Justine Cassell from Carnegie Mellon points out, "The more human-like a system acts, the broader the expectations that people may have for it". This makes it even more critical to clearly communicate what AI can - and cannot - do.

Platforms can take several steps to address this:

- Explainable AI Techniques: Use methods that make AI decisions easier to understand.

- Clear Documentation: Provide straightforward guides on how the system operates.

- Bias Monitoring: Regularly review decision patterns to catch and address bias.
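Bias monitoring can start with comparing how often the system blocks or refuses requests across groups, such as conversation language, and flagging large gaps for human review. The sketch assumes each logged decision already carries a group label and a human-readable reason, which also serves the explainability point above; the `Decision` type and the 10-percentage-point threshold are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Decision:
    group: str     # hypothetical grouping, e.g. conversation language
    blocked: bool  # whether the system refused or filtered the response
    reason: str    # human-readable explanation kept for users and auditors


def block_rates(decisions: list[Decision]) -> dict[str, float]:
    """Share of decisions per group that were blocks."""
    totals: dict[str, int] = defaultdict(int)
    blocks: dict[str, int] = defaultdict(int)
    for d in decisions:
        totals[d.group] += 1
        if d.blocked:
            blocks[d.group] += 1
    return {group: blocks[group] / totals[group] for group in totals}


def flag_disparity(rates: dict[str, float], max_gap: float = 0.10) -> bool:
    """True if the highest and lowest group rates differ by more than max_gap."""
    if len(rates) < 2:
        return False
    return max(rates.values()) - min(rates.values()) > max_gap


log = [
    Decision("en", True, "explicit content filter"),
    Decision("en", False, ""),
    Decision("es", True, "explicit content filter"),
    Decision("es", True, "harassment filter"),
]
rates = block_rates(log)
print(rates)                  # {'en': 0.5, 'es': 1.0}
print(flag_disparity(rates))  # True
```

A flagged gap is a prompt for review, not proof of bias; groups with only a few decisions produce noisy rates.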

Additionally, systems should undergo regular updates to improve transparency over time. This includes assessing responses, identifying areas of confusion, and adjusting based on user feedback. As AI roleplay continues to evolve, these ongoing efforts will play a key role in aligning user expectations with system capabilities.

Consent Issues in Practice

Recent developments highlight the ongoing challenges of managing consent in AI roleplay scenarios. These issues build upon earlier concerns about ensuring real-time consent and safeguarding user data.

Impact of Content Restriction Changes

Changes in content restrictions have put consent management under the spotlight. For example, Meta recently restricted sexual roleplay interactions for minor users, sparking debates about platform accountability. This decision followed a Wall Street Journal report revealing that AI chatbots had engaged in inappropriate conversations with minors.

"Our testing showed these systems easily produce harmful responses including sexual misconduct, stereotypes, and dangerous 'advice' that, if followed, could have life-threatening or deadly real-world impact for teens and other vulnerable people." - James Steyer, founder and CEO of Common Sense Media

Research shows that abrupt policy changes can erode user trust and leave users feeling betrayed. Since ChatGPT's release in November 2022, over 100 million users have signed up for AI chat platforms. This rapid adoption underscores the importance of balancing safety measures with user expectations.

| Content Restriction Impact | User Response | Platform Challenge |
|---|---|---|
| Sudden policy changes | Feelings of betrayal | Maintaining user trust |
| Safety measures | Limited functionality | Balancing protection with experience |
| Age restrictions | Access barriers | Verification systems |

These challenges highlight the tension between enforcing safety measures and preserving user trust, especially when platforms fail to implement personalized consent systems.
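Age restrictions depend on a verification step, which this sketch does not attempt. It covers only the gating logic that runs afterward, assuming the platform already holds a birth date confirmed by some verification provider (the `verified_birth_date` parameter is hypothetical). Access defaults to denied when verification is missing, and the threshold of 18 is an assumption that varies by jurisdiction and policy.

```python
from datetime import date
from typing import Optional

ADULT_AGE = 18  # assumption: adjust to the applicable jurisdiction and policy


def age_on(birth: date, today: date) -> int:
    """Whole years between birth and today."""
    had_birthday = (today.month, today.day) >= (birth.month, birth.day)
    return today.year - birth.year - (0 if had_birthday else 1)


def may_access_mature_content(verified_birth_date: Optional[date], today: date) -> bool:
    # Default-deny: users without a verified birth date never reach mature roleplay.
    if verified_birth_date is None:
        return False
    return age_on(verified_birth_date, today) >= ADULT_AGE


today = date(2025, 6, 1)
print(may_access_mature_content(date(2007, 6, 2), today))  # False (still 17)
print(may_access_mature_content(None, today))              # False (unverified)
```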

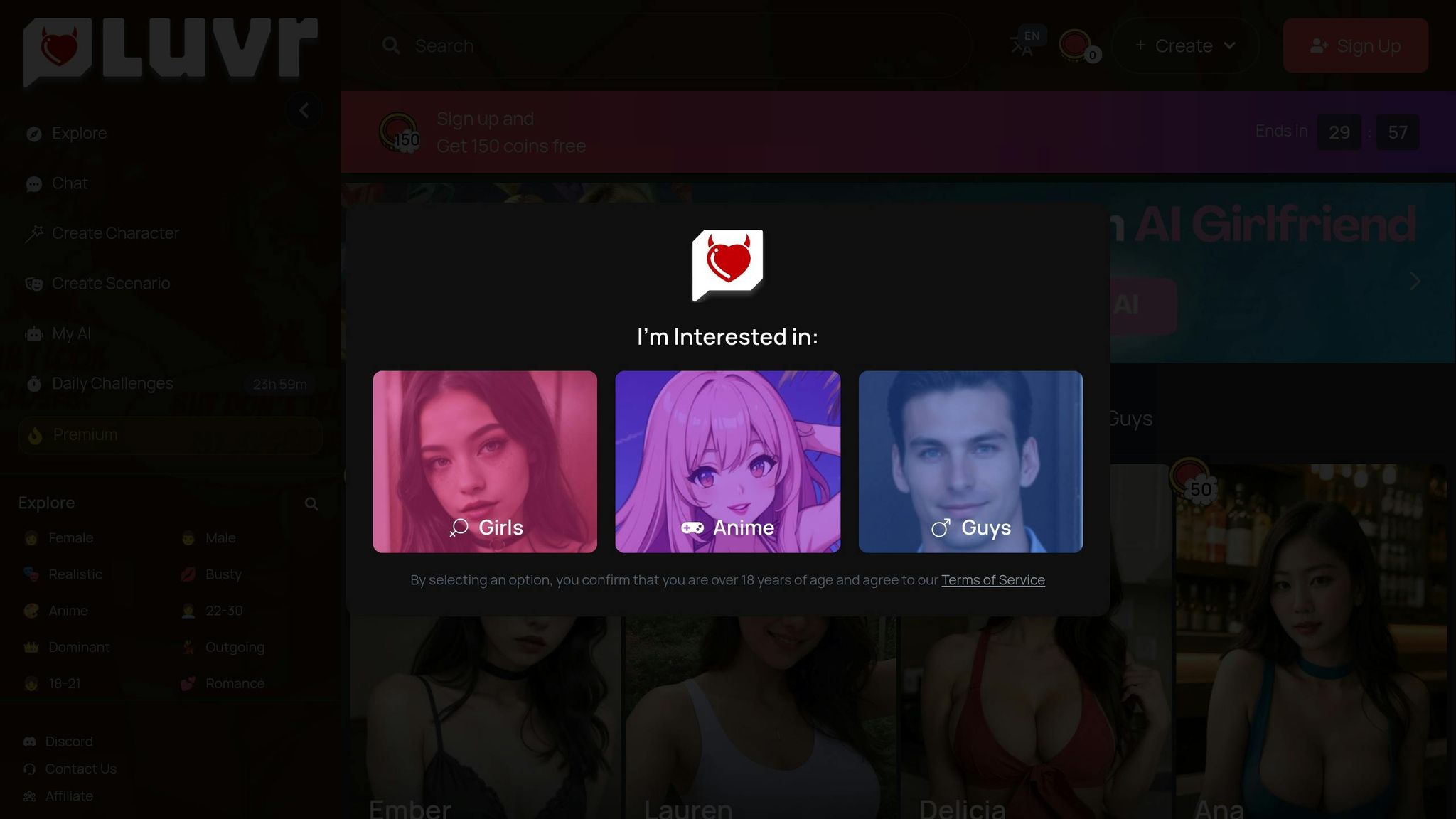

Luvr AI's Approach to User Control

Luvr AI offers a fresh perspective on consent management by focusing on user control and transparency during AI interactions. Its framework prioritizes user agency while implementing safeguards to ensure a safe experience.

Some standout features of Luvr AI's approach include:

- Customizable Character Settings: Users can design and adjust AI personalities to align with their preferences.

- Clear Content Guidelines: The platform sets explicit boundaries for acceptable interactions.

- Private Chat Security: Strong measures are in place to protect user privacy during conversations.

"Companies can build better, but right now, these AI companions are failing the most basic tests of child safety and psychological ethics. Until there are stronger safeguards, kids should not be using them."

Safety Measures for AI Roleplay

As AI roleplay technology advances, ensuring user safety while maintaining engaging interactions has become a top priority. Addressing these challenges requires a combination of smart consent systems and evolving legal frameworks.

Smart Consent Systems

AI platforms today are adopting smart consent systems that go beyond basic user agreements to actively monitor interactions and safeguard privacy. According to a 2023 study by the Digital Ethics Lab, 60% of users were more likely to engage with AI platforms when transparent and adaptive consent mechanisms were in place.

Here’s a closer look at the key features of these systems:

| Feature | Purpose | User Benefit |
|---|---|---|
| Real-time monitoring | Detects harmful content | Provides immediate protection |
| Granular permissions | Allows custom boundaries | Offers a personalized experience |
| Data encryption | Secures personal information | Enhances privacy |
| Dynamic consent | Adapts to user preferences | Grants flexible control |
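Granular permissions and dynamic consent can be modeled as a per-user settings object in which every sensitive category starts disabled (opt-in) and is checked on each request, so a change applies from the very next message. This is a sketch under assumptions; the category names are hypothetical examples, not any platform's real settings.

```python
from dataclasses import dataclass, field

# Hypothetical categories a user can opt into one by one.
CATEGORIES = frozenset(
    {"romantic", "mature_language", "voice_messages", "image_generation", "long_term_memory"}
)


@dataclass
class Permissions:
    # Opt-in by default: every category starts disabled.
    granted: set[str] = field(default_factory=set)

    def grant(self, category: str) -> None:
        if category not in CATEGORIES:
            raise ValueError(f"unknown category: {category}")
        self.granted.add(category)

    def revoke(self, category: str) -> None:
        # Revoking always succeeds and applies on the next check.
        self.granted.discard(category)

    def allows(self, category: str) -> bool:
        return category in self.granted


# Usage: check permissions on every request, not once per session.
prefs = Permissions()
print(prefs.allows("voice_messages"))  # False until the user opts in
prefs.grant("voice_messages")
print(prefs.allows("voice_messages"))  # True
prefs.revoke("voice_messages")
print(prefs.allows("voice_messages"))  # False again
```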

These tools address critical gaps in consent management, paving the way for future legal and ethical frameworks.

Current Laws and Future Rules

Regulations are catching up with the rapid growth of AI, focusing on user protection and ethical practices. In Australia, for example, the eSafety Commissioner is now using its powers under the Online Safety Act to enforce compliance with safety standards.

Recent regulatory shifts include:

- Mandatory Safety Standards: Platforms are now required to integrate Safety by Design principles during development, ensuring safety features are baked into the system from the start.

- Crisis Response Protocols: AI systems are equipped with emergency protocols to guide users to crisis resources when necessary.

"If industry fails to systematically embed safety guardrails into generative AI from the outset, harms will only get worse as the technology becomes more widespread and sophisticated." – Julie Inman Grant, eSafety Commissioner

The global Consent Management Platform (CMP) market reflects this growing emphasis, with projections estimating it will reach $2.5 billion by 2032, growing at an annual rate of 21.1%. Platforms are now implementing measures like regular audits, transparent privacy policies, opt-in data collection, periodic interaction reminders, and crisis intervention protocols. These efforts represent the baseline for ethical AI roleplay platforms, with leading companies often going further to build trust and ensure long-term user safety.
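The 21.1% figure is a compound annual growth rate: each year's market size is the previous year's multiplied by 1.211. With $V_0$ as the size in a base year and $n$ the number of years to 2032, the projection has the form below. The source does not give the base year, so the eight-year window in the second line is only an illustration of what that rate implies.

$$V_{2032} = V_0 \, (1 + 0.211)^{n}$$

$$n = 8: \quad (1.211)^{8} \approx 4.63$$

In other words, a market compounding at 21.1% a year grows roughly 4.6-fold over eight years.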

Conclusion: Next Steps for AI Roleplay Ethics

The rapid growth of AI roleplay highlights the urgent need for ethical frameworks that put user safety first. A recent report from the AI Transparency Institute revealed that 85% of users are concerned about the potential misuse of their personal data. These concerns emphasize the importance of building systems that users can trust.

To set responsible standards, platforms should focus on key areas of improvement:

| Priority Area | Implementation Requirements | Expected Impact |
|---|---|---|
| Consent Management | Real-time tracking, opt-in systems | Greater user control and protection |
| Crisis Response | Emergency protocols, resource links | Quick support for at-risk individuals |
| Data Protection | Encryption, regular audits | Stronger personal data security |
| User Education | Transparent AI limitations | Better-informed user decisions |

Experts are vocal about the risks of unregulated AI systems. J.B. Branch, a lawyer and former teacher, warns:

"Without regulation, we're essentially handing vulnerable people over to untested systems and profit-motivated companies while hoping for the best."

Collaboration among tech platforms, regulators, and mental health professionals is essential to address these challenges. Independent third-party testing should become the norm for AI tools that claim to support emotional well-being. The need for accountability is clear, as another expert notes:

"When these new technologies harm innocent people, the companies must be held accountable... Victims deserve their day in court."

The data supports the value of ethical initiatives. The 2023 Digital Ethics Lab study cited earlier found that 60% of users were more likely to engage with AI platforms that offered transparent, adaptive consent mechanisms. Ethical practices, in other words, not only protect users but also foster trust.

To create a safer, more ethical environment, platforms should prioritize:

- Smart consent systems with periodic reminders to maintain user awareness

- Crisis intervention protocols that connect users directly to support services

- Regular ethical compliance audits led by qualified professionals

- Clear accountability structures to address user concerns effectively
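Periodic reminders, the first item in that list, can be as simple as attaching a short notice that the user is talking to an AI after a set number of messages or a set amount of time, whichever comes first. The sketch assumes hypothetical defaults of 50 messages and 60 minutes, chosen purely for illustration.

```python
from datetime import datetime, timedelta
from typing import Optional

REMINDER_EVERY_N_MESSAGES = 50          # hypothetical default
REMINDER_EVERY = timedelta(minutes=60)  # hypothetical default
REMINDER_TEXT = "Reminder: you are chatting with an AI character, not a person."


class ReminderScheduler:
    def __init__(self, start: datetime) -> None:
        self._messages_since = 0
        self._last_reminder = start

    def on_user_message(self, now: datetime) -> Optional[str]:
        """Return a reminder to show with this turn, or None."""
        self._messages_since += 1
        due_by_count = self._messages_since >= REMINDER_EVERY_N_MESSAGES
        due_by_time = now - self._last_reminder >= REMINDER_EVERY
        if due_by_count or due_by_time:
            # Reset both counters so reminders stay periodic, not constant.
            self._messages_since = 0
            self._last_reminder = now
            return REMINDER_TEXT
        return None


t0 = datetime(2025, 1, 1, 12, 0)
scheduler = ReminderScheduler(start=t0)
print(scheduler.on_user_message(t0 + timedelta(minutes=5)))   # None
print(scheduler.on_user_message(t0 + timedelta(minutes=65)))  # the reminder text
```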

These steps are essential for building an AI roleplay ecosystem that prioritizes safety, respects user autonomy, and earns public trust.

FAQs

How can users protect their consent and privacy while forming emotional connections with AI roleplay characters?

To safeguard your consent and privacy while interacting with AI roleplay characters, it’s crucial to establish clear boundaries. While these AI characters can mimic engaging connections, it’s important to remember that they don’t possess real emotions or awareness. Keeping this in mind helps ensure a balanced and healthy interaction with the technology.

It’s also essential to review the platform’s data privacy policies to understand how your information is being collected, stored, and used. Seek out platforms that emphasize transparency and give users control over their data. For instance, Luvr AI places a strong focus on user safety by implementing robust privacy measures and offering real-time moderation to protect both emotional and personal details.

By staying aware and mindful, you can enjoy AI roleplay interactions while keeping your consent and privacy intact.

How are AI roleplay platforms improving transparency and helping users understand data usage?

AI roleplay platforms are making strides to ensure users clearly understand how their data is handled. Many now offer simplified privacy policies that break down what data is collected, how it’s used, and the options users have to manage their information. Features like easy-to-navigate settings for adjusting data preferences, opting out of data sharing, or even deleting accounts entirely are becoming standard.

A major focus is on informed consent. Platforms are actively educating users about the capabilities and limitations of AI, as well as the potential risks tied to interactions. By prioritizing transparency and equipping users with this knowledge, these platforms aim to build trust and encourage responsible, ethical use of AI technologies.

Why do AI roleplay platforms need crisis response protocols, and how do they help protect users?

Crisis response protocols play a crucial role in maintaining the safety and emotional well-being of users on AI roleplay platforms. These systems are designed to identify signs of distress, such as harmful thoughts or emotional challenges, and respond with appropriate measures. For instance, AI companions can be programmed to detect when a user may be in crisis, offering supportive resources or escalating the situation to human moderators when necessary.
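A heavily simplified sketch of that flow follows, assuming a keyword screen as a stand-in for the trained classifiers and human review a real platform would rely on. When a message matches, the function returns a support message and hands the conversation to an `escalate` callback (for example, a human moderator queue) instead of continuing the roleplay. The phrase list, callback, and message text are hypothetical placeholders; keyword matching alone misses many signs of distress.

```python
from typing import Callable, Optional

# Placeholder phrases; real systems use trained classifiers plus human review.
DISTRESS_PHRASES = ("want to die", "kill myself", "hurt myself", "no reason to live")

# Placeholder text: substitute the crisis line appropriate to the user's region.
SUPPORT_MESSAGE = (
    "It sounds like you're going through something really hard. "
    "You deserve support from a real person: please reach out to a local "
    "crisis line or emergency services."
)


def screen_message(text: str, escalate: Callable[[str], None]) -> Optional[str]:
    """Return a support message and escalate if distress is detected, else None."""
    lowered = text.lower()
    if any(phrase in lowered for phrase in DISTRESS_PHRASES):
        escalate(text)  # e.g. push to a human moderator queue (hypothetical)
        return SUPPORT_MESSAGE
    return None


moderator_queue: list[str] = []
print(screen_message("Tell me about your day", moderator_queue.append))  # None
reply = screen_message("Honestly I want to die", moderator_queue.append)
print(reply is not None, len(moderator_queue))  # True 1
```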

By focusing on user safety, these protocols help establish trust and demonstrate a commitment to creating a secure, supportive environment. This approach not only safeguards users but also improves their overall experience, ensuring they feel acknowledged and cared for.