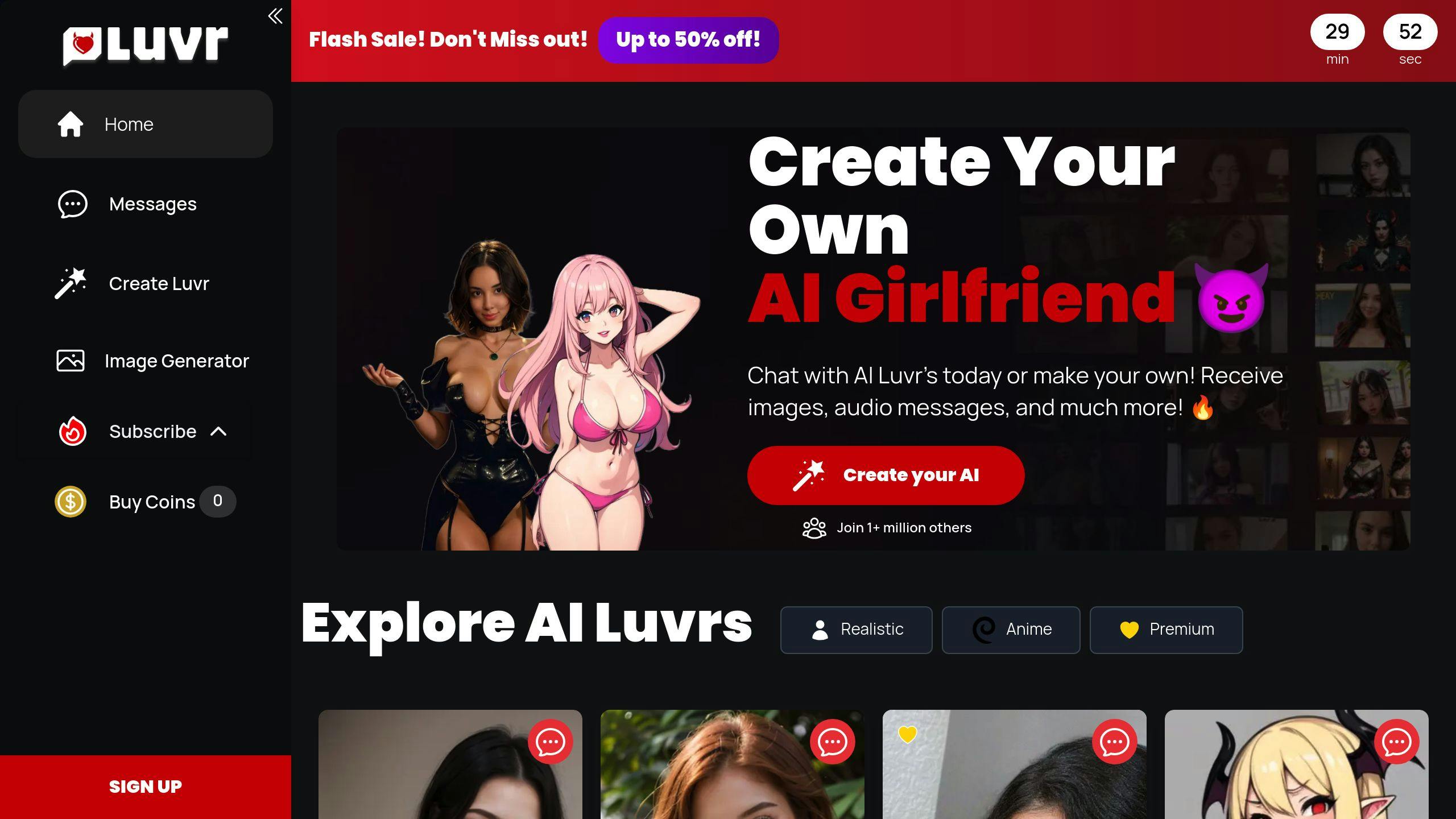

Create Your Own AI Girlfriend 😈

Chat with AI Luvr's today or make your own! Receive images, audio messages, and much more! 🔥

4.5 stars

Global AI Emotional Impact: 2024 Study

AI companions are becoming a big part of emotional support, helping people by reading emotions through facial expressions, voice, and text. Here's what the 2024 study reveals:

- Mental Health Support: AI tools like Luvr AI and Kora fill gaps where human help is unavailable. 32% of people are open to trying AI therapists.

- Global Perspectives: Acceptance varies by region:
  - East Asia: Positive due to tech-forward culture.
  - North America: Warm response due to lack of therapists.
  - Europe: Mixed, with privacy concerns.
  - Developing Markets: Growing interest due to limited mental health access.

- Benefits: AI companions provide non-judgmental listening, emotional support, and social practice.

- Risks: Overattachment, privacy concerns, and confusing AI with real human connections.

Quick Comparison of Regional Adoption

| Region | Drivers of Adoption | General Sentiment |
|---|---|---|
| East Asia | Tech-friendly, group-focused | Very positive |
| North America | Therapist shortage, tech-savvy | Positive |
| Europe | Privacy-focused | Mixed |
| Developing Markets | Limited mental health access | Growing positivity |

The study highlights the need for ethical development, cultural sensitivity, and privacy protection as these tools grow. AI companions are reshaping emotional landscapes, but they must enhance - not replace - human connections.


Global Views on AI Emotional Companions

Different parts of the world view AI emotional companions differently, shaped by their local culture, tech landscape, and social values. New data points to more people warming up to AI-powered emotional support, especially in tech-savvy regions. Let's look at how different places approach these digital companions.

Regional Differences in Acceptance

The way people feel about AI companions isn't the same everywhere - it depends on what they need, what tech they can access, and what their society thinks is normal. Take Luvr AI, for example. They've figured out how to make their AI companions work for different cultures by tweaking how the AI talks and behaves. It's like having a friend who knows just how to act in any social situation.

"The integration of AI in mental health care must be carefully balanced with cultural sensitivities and local healthcare practices to ensure effective adoption across different regions." - Oliver Wyman Forum Survey, 2024

Here's what we're seeing around the world:

| Region | What Drives Adoption | How People Feel About It |
|---|---|---|
| East Asia | Tech-loving culture, group-focused society | Very positive |
| North America | Not enough therapists, tech-smart population | Pretty positive |
| Europe | Big on privacy, lots of rules | Mixed feelings |
| Developing Markets | Hard to find mental health help | Getting more positive |

Example: Luvr AI

People are really taking to Luvr AI's personal approach, even though some worry about the ethics. It's like walking a tightrope - you need to balance what tech can do with what it should do. The big question is: How do we fit these AI companions into different societies in a way that helps people without replacing human connections? These AI friends could fill gaps in emotional support, but they need to be introduced thoughtfully and with respect for local customs.


Emotional and Psychological Effects

The 2024 study shows how AI companions affect our daily lives - both helping and potentially causing problems.

Positive Effects of AI Companionship

People using AI companions are seeing real benefits for their mental health. Take the EVI platform's chatbot Kora - it shows how AI can step in when someone needs emotional support right away. These AI friends can spot patterns in how you're feeling and suggest ways to cope, helping fill gaps when human support isn't available.
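The idea of "spotting patterns" in how someone feels can be illustrated with a deliberately simple sketch. It assumes one mood score per day on a hypothetical -1.0 to 1.0 scale (self-reported or inferred) and flags a low trend when a rolling average drops below a threshold. The function names, window size, threshold, and coping prompts are illustrative assumptions, not how Kora or any other product actually works.

```python
from statistics import mean

# Hypothetical coping prompts; a real product would use clinically reviewed content.
COPING_SUGGESTIONS = [
    "Would a short breathing exercise help right now?",
    "Is there someone you trust that you could reach out to today?",
]


def low_mood_days(scores: list[float], window: int = 3, threshold: float = -0.3) -> list[int]:
    """Return the day indices where the rolling mean mood is below `threshold`.

    `scores` holds one mood value per day on an assumed -1.0 (low) to 1.0 (high)
    scale. A day can only be flagged once a full `window` of scores exists.
    """
    flagged = []
    for end in range(window, len(scores) + 1):
        if mean(scores[end - window:end]) < threshold:
            flagged.append(end - 1)  # index of the last day in this window
    return flagged


def respond(scores: list[float]) -> str:
    """Offer a coping prompt only if the most recent window shows a low trend."""
    flagged = low_mood_days(scores)
    if flagged and flagged[-1] == len(scores) - 1:
        return COPING_SUGGESTIONS[0]
    return "Thanks for checking in!"


week = [0.5, 0.2, -0.2, -0.5, -0.6]
print(low_mood_days(week))  # [4]
print(respond(week))
```

A rolling mean is used instead of single readings so that one bad day alone does not trigger a response; real systems would draw on much richer signals and professionally reviewed guidance.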

"Advances in emotional AI have transformed these platforms into tools for human interaction, addressing the innate need for intimacy." - From the social and ethical impact study

Here's what researchers found about AI companions:

| What They Help With | How They Help |
|---|---|
| Mental Health | Always there when you need them |
| Sharing Feelings | No judgment, just listening |
| Social Practice | Safe space to work on people skills |

But it's not all smooth sailing - there are some bumps in the road we need to watch out for.

Risks and Concerns

The study points out some red flags we can't ignore. The biggest worry? Making sure people don't blur the lines between AI friends and real human connections.

Here's what keeps researchers up at night:

- Getting Too Attached: When your AI friend is always there with the perfect response, it might be hard to step away

- Keeping Secrets Safe: Your emotional chats with AI need strong protection - just like any private conversation

- Keeping It Real: There's a risk of mixing up AI responses with genuine human feelings

The research team says we need solid rules to make sure AI companions strengthen our relationships with real people rather than replace them.

Ethics and Future Developments

Ethical Challenges

The emotional AI market is booming: it is projected to grow from $3.745 billion in 2024 to $7.003 billion by 2029. But with this growth come big questions about responsibility. In 2024, the biggest worries center on keeping data safe and making sure emotional data isn't misused. Companies building these AI companions need to strike the right balance between pushing boundaries and protecting users.
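The two market-size figures imply an average yearly growth rate. A quick way to see it is the compound annual growth rate (CAGR), (end / start)^(1 / years) - 1, over the five years from 2024 to 2029. This sketch assumes smooth compounding between the two quoted projections; actual year-to-year growth can differ.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by two values `years` apart."""
    return (end_value / start_value) ** (1 / years) - 1


# Market-size projections quoted above, in billions of US dollars.
rate = cagr(3.745, 7.003, years=2029 - 2024)
print(f"Implied CAGR: {rate:.1%}")  # Implied CAGR: 13.3%
```

In other words, the projection corresponds to the market growing by roughly 13% a year.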

Privacy has shot to the top of the priority list, especially now that these platforms collect such personal emotional information. What's acceptable in one country might raise eyebrows in another - that's why developers need to think carefully about how different cultures view AI emotional interactions.

"The integration of emotional AI across healthcare and entertainment sectors demands a new framework for ethical development. We must ensure these systems enhance rather than exploit human emotional connections." - From the 2024 Global AI Impact Study

These ethical issues keep popping up as the technology gets better and better. Let's look at what's coming next.

Future Trends and Research Areas

AI emotional companions are changing fast. Right now, we're seeing big steps forward in how these systems learn your personal emotional patterns and keep your data safe through blockchain tech. But it's not just about the tech - different cultures have different ways of showing and handling emotions, and that's shaping how these systems grow.
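At its core, blockchain-style record keeping chains records together by hash so that any later edit is detectable. The minimal sketch below uses only Python's standard library to show that idea on a hypothetical consent log; the function names and record fields are illustrative assumptions, not any platform's design. Hash chaining makes records tamper-evident but does not hide them, so emotional content would still need encryption and access control, which is why the sketch logs event metadata rather than conversation text.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _record_hash(prev_hash: str, payload: dict) -> str:
    # Canonical JSON (sorted keys) so identical content always hashes the same way.
    body = json.dumps({"prev": prev_hash, "payload": payload}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append(chain: list[dict], payload: dict) -> None:
    """Add a record that commits to the hash of the record before it."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    chain.append({"prev": prev_hash, "payload": payload,
                  "hash": _record_hash(prev_hash, payload)})


def verify(chain: list[dict]) -> bool:
    """Recompute every link; an edited or reordered record breaks the chain."""
    prev_hash = GENESIS
    for record in chain:
        if record["prev"] != prev_hash:
            return False
        if record["hash"] != _record_hash(prev_hash, record["payload"]):
            return False
        prev_hash = record["hash"]
    return True


# Hypothetical consent log: event metadata only, no conversation text.
log: list[dict] = []
append(log, {"user": "user-123", "event": "consent_granted"})
append(log, {"user": "user-123", "event": "data_export_requested"})
print(verify(log))  # True
log[0]["payload"]["event"] = "consent_revoked"  # simulate tampering
print(verify(log))  # False
```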

Scientists are zeroing in on three main areas:

- Keeping emotional data private: Building better ways to protect the personal emotional information these systems collect

- Getting emotions right across cultures: Teaching AI to pick up on how different cultures express feelings - from subtle communication differences to relationship customs

- Understanding the long game: Looking at how spending lots of time with AI companions affects our human relationships

Getting better at reading emotions accurately is crucial - if these systems mess up emotional cues, users lose trust. That's especially tricky when you're dealing with different cultural backgrounds.
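One common way to check whether emotion recognition holds up across cultural backgrounds is disaggregated evaluation: measure accuracy separately for each group and look at the gap between them. The sketch below assumes labeled test records of (group, true emotion, predicted emotion); the group names and labels are placeholders, not data from the study.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Per-group accuracy from (group, true_label, predicted_label) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, truth, predicted in records:
        total[group] += 1
        correct[group] += int(truth == predicted)
    return {group: correct[group] / total[group] for group in total}


def accuracy_gap(per_group):
    """Difference between the best- and worst-served groups."""
    return max(per_group.values()) - min(per_group.values())


# Toy placeholder data, not results from any study.
records = [
    ("group_a", "joy", "joy"),
    ("group_a", "sadness", "sadness"),
    ("group_b", "joy", "neutral"),
    ("group_b", "sadness", "sadness"),
]
per_group = accuracy_by_group(records)
print(per_group)                # {'group_a': 1.0, 'group_b': 0.5}
print(accuracy_gap(per_group))  # 0.5
```

A single overall accuracy figure (here 75%) would hide the fact that one group is served noticeably worse, which is exactly the kind of trust-eroding mistake described above.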

Conclusion

The 2024 study shows how AI companions are reshaping our emotional landscape. The numbers tell an interesting story: the market's set to jump from $3.745 billion in 2024 to $7.003 billion by 2029. But it's not just about the money - these AI friends are making their mark in mental health support and customer service.

Here's what's really catching everyone's attention: different cultures have different takes on AI companions. Some places can't get enough of them, while others are taking it slow. It's like introducing a new friend to different friend groups - some hit it off right away, others need time to warm up.

The rise of personalized AI interactions brings up some pretty big questions. Sure, they're getting better at connecting with us, but we need to think about what this means for our human relationships. It's like having a GPS for emotions - helpful, but should it replace our natural sense of direction?

What developers and researchers should focus on:

- Keep it private: Lock down that emotional data like it's Fort Knox. Blockchain could be your best friend here.

- Mind the culture gap: Make sure these AI systems can read the room in Tokyo just as well as they can in Toronto.

- Set clear boundaries: These AI companions should be like good backup singers - supporting the main act (human connections), not stealing the show.

The trick is to keep pushing the tech forward while making sure we're not losing touch with what makes us human. Think of it as building a bridge - you need solid engineering, but you also need to make sure people feel safe crossing it.