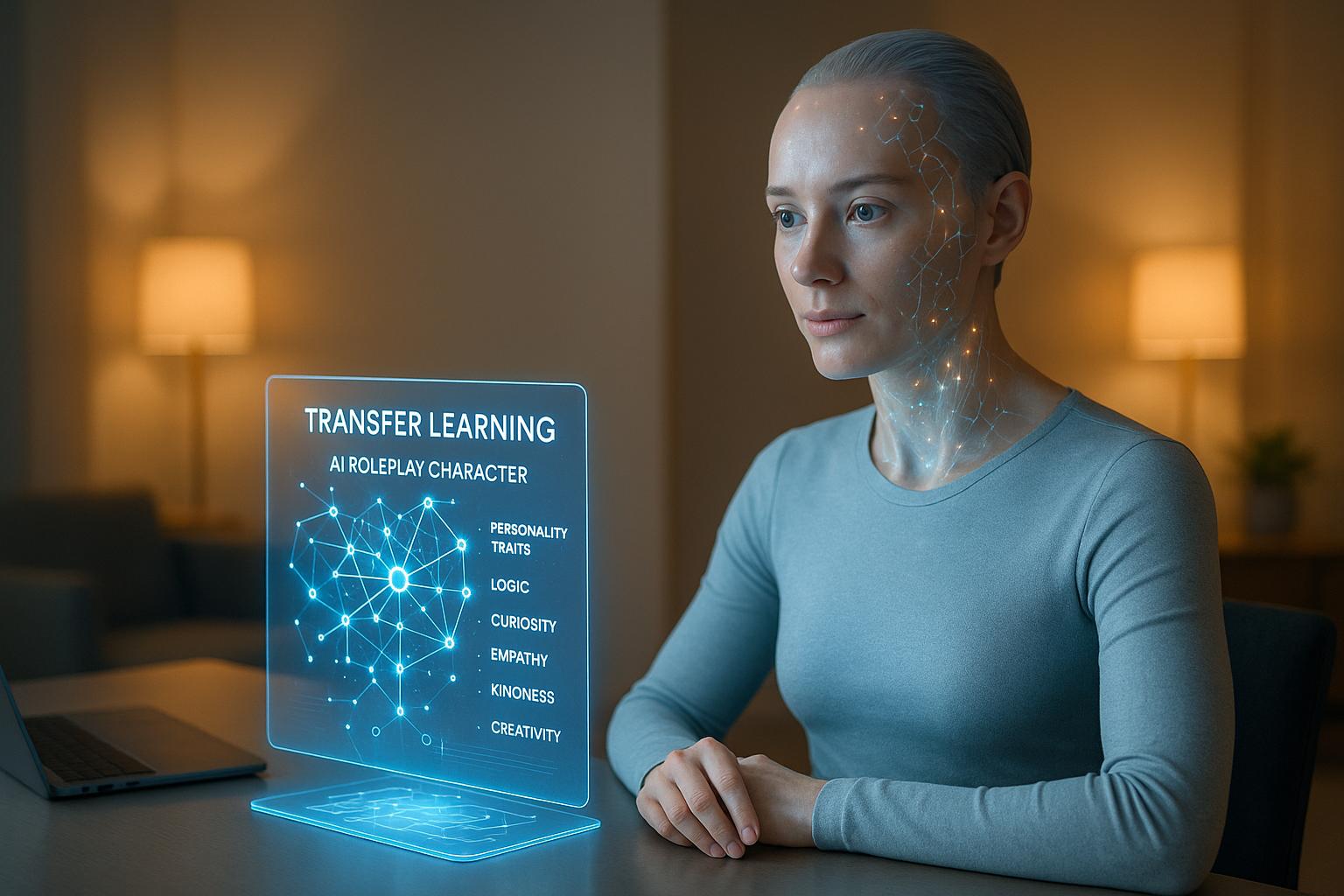

Transfer Learning for AI Roleplay Characters

AI roleplay characters are getting smarter and more lifelike - and transfer learning is the key. This technique allows developers to take pre-trained models and fine-tune them for creating personalized, responsive AI companions without starting from scratch. Here’s what you need to know:

- What is Transfer Learning? It’s like teaching an AI new tricks by building on what it already knows, saving time and resources.

- Why It Matters: Platforms like Luvr AI use it to create characters that feel natural, remember past interactions, and adapt to user preferences.

- How It Works: Developers fine-tune models with specific traits, use user feedback to improve, and ensure consistency while allowing characters to evolve.

- Challenges: Balancing personalization, maintaining consistency, and avoiding overfitting with small datasets.

- Benefits: Faster development, lower costs, and better performance even with limited data.

Transfer learning is transforming how AI characters are developed, making them more engaging and tailored to individual users. Let’s dive into how this works and what’s next for this technology.


How Transfer Learning Works for AI Personality Development

Transfer learning transforms pre-trained language models into unique AI personalities by fine-tuning their general language capabilities to reflect distinct traits.

Building Personality Traits from Pre-Trained Models

Pre-trained language models act as the backbone for creating AI personalities, leveraging their deep understanding of language patterns and conversational dynamics. By refining these models, developers can craft personalities that range from cheerful and outgoing to reflective and reserved.

Objectives such as masked language modeling and next sentence prediction (the tasks BERT-style models are pre-trained on), along with downstream tasks such as sentiment analysis, are used to adapt these models so they express specific traits. Research reports that such fine-tuning can improve performance metrics, with accuracy gains of over 3% in some cases.

For platforms like Luvr AI, this process allows users to design AI companions with distinct personalities. Whether someone seeks an energetic conversationalist or a thoughtful confidant, the system adjusts classification head layers in the pre-trained models to capture the subtle nuances that define each personality.
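
A minimal sketch of the "adjust only the head" idea, in Python: the pre-trained encoder is treated as frozen, so only a small logistic-regression head is trained to separate two personality styles. The feature vectors are hypothetical stand-ins for encoder outputs, and the two-label setup (cheerful vs. reserved) is an assumption for illustration, not how any particular platform implements it.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical stand-in for a frozen pre-trained encoder: in practice these vectors
# would come from the model's hidden states and would never be updated.
FROZEN_FEATURES: Dict[str, List[float]] = {
    "Omg that's amazing, let's celebrate!": [0.9, 0.8],
    "Yay, tell me everything!": [0.8, 0.9],
    "Hmm. Let me think about that quietly.": [0.1, 0.2],
    "I'd rather listen than talk right now.": [0.2, 0.1],
}
LABELS: Dict[str, int] = {  # 1 = cheerful/outgoing, 0 = reflective/reserved
    "Omg that's amazing, let's celebrate!": 1,
    "Yay, tell me everything!": 1,
    "Hmm. Let me think about that quietly.": 0,
    "I'd rather listen than talk right now.": 0,
}

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def train_head(features: Dict[str, List[float]], labels: Dict[str, int],
               lr: float = 0.5, epochs: int = 200) -> Tuple[List[float], float]:
    """Train only the classification head; the encoder features stay fixed."""
    dim = len(next(iter(features.values())))
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        for text, x in features.items():
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            err = p - labels[text]  # gradient of binary cross-entropy w.r.t. the logit
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b

def predict(w: List[float], b: float, x: List[float]) -> float:
    """Probability that a reply with encoder features x sounds 'cheerful'."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

w, b = train_head(FROZEN_FEATURES, LABELS)
print(round(predict(w, b, [0.85, 0.85]), 3))
```

Because only the head's few weights change, this kind of training is cheap; the expensive general-language knowledge is reused as-is.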

Using User Feedback to Improve AI Characters

User feedback plays a vital role in refining AI personalities, creating a continuous improvement loop.

Reinforcement Learning from Human Feedback (RLHF) integrates user preferences directly into the training process. For example, research found that "Love Reactions", a simple feedback mechanism, showed a strong positive correlation with user retention, even though only about 0.1% of model-generated messages received one.

A related example is Anthropic’s AI assistant, Claude. Its training combines human feedback with a "constitution", a written set of principles the model uses to critique and revise its own responses, so its behavior can be adjusted while adhering to ethical guidelines.

Platforms like Luvr AI rely heavily on these feedback-driven refinements, ensuring that user interactions continuously shape and evolve AI companions. As Saurav Agarwal, an Architecture & Engineering Leader, advises:

"Fine-tuning generative AI with feedback is like perfecting a recipe. Start with a clear end goal. Prioritize quality feedback over sheer volume. Use continuous feedback loops: train, adjust, and repeat. Ensure your feedback sources are diverse, capturing varied perspectives."

This iterative process ensures that AI personalities remain consistent while adapting to user needs.

Keeping Characters Consistent While Allowing Change

One of the biggest challenges in AI personality development is ensuring that characters stay true to their established traits while evolving over time. Users expect their AI companions to remember past interactions and maintain a sense of continuity.

Continual learning addresses this by updating models with new data while preserving previously learned knowledge. This prevents significant performance drops and ensures that characters remain dynamic yet consistent. Techniques like Elastic Weight Consolidation (EWC) are particularly effective in avoiding "catastrophic forgetting" when models learn new tasks.
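
The EWC penalty itself is compact. A minimal Python sketch, assuming parameters are flattened into plain lists: after training the original personality, record its parameters θ* and a diagonal Fisher estimate F (here the mean squared per-example gradient, a common approximation); while learning new data, add (λ/2)·Σ F_i(θ_i − θ*_i)² to the loss so that parameters important to the old behavior are expensive to move. All numbers are made up.

```python
from typing import List, Sequence

def diagonal_fisher(per_example_grads: Sequence[Sequence[float]]) -> List[float]:
    """Empirical diagonal Fisher: mean of squared per-example gradients for each parameter."""
    n = len(per_example_grads)
    dim = len(per_example_grads[0])
    return [sum(g[i] ** 2 for g in per_example_grads) / n for i in range(dim)]

def ewc_penalty(params: Sequence[float], anchor_params: Sequence[float],
                fisher: Sequence[float], lam: float) -> float:
    """(lam / 2) * sum_i F_i * (theta_i - theta*_i)^2"""
    return 0.5 * lam * sum(f * (p - a) ** 2 for p, a, f in zip(params, anchor_params, fisher))

def ewc_loss(new_task_loss: float, params: Sequence[float], anchor_params: Sequence[float],
             fisher: Sequence[float], lam: float) -> float:
    """Loss on the new data plus the consolidation penalty."""
    return new_task_loss + ewc_penalty(params, anchor_params, fisher, lam)

# Illustrative numbers: parameter 0 mattered a lot for the old persona, parameter 1 barely.
fisher = diagonal_fisher([[1.0, 0.1], [3.0, -0.1]])
anchor = [1.0, -0.5]
print(ewc_penalty([1.5, -0.5], anchor, fisher, lam=1.0))  # moving the important parameter: 0.625
print(ewc_penalty([1.0, 0.0], anchor, fisher, lam=1.0))   # same-size move of the other: ~0.00125
```

The same 0.5 change costs about 500 times more on the parameter the old personality relied on, which is what keeps core traits stable while less important weights adapt.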

To maintain this balance, robust evaluation methods that use multiple metrics are essential. These methods ensure that updates preserve the core personality traits while allowing for natural adaptation. For platforms like Luvr AI, this balance is key to fostering lasting relationships between users and their AI companions.
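
One way to make "multiple metrics" concrete is a release gate: compare a candidate update with the current model on several scores and reject it if any score drops by more than a tolerance. A minimal Python sketch; the three metric names and the 0.02 tolerance are hypothetical choices, not an established standard.

```python
from dataclasses import dataclass, fields
from typing import List, Tuple

@dataclass
class EvalReport:
    # All scores are assumed to be in [0, 1], higher is better.
    trait_consistency: float  # e.g. share of replies rated "in character"
    memory_recall: float      # e.g. accuracy on questions about earlier turns
    user_rating: float        # e.g. normalized rating on held-out feedback

def passes_gate(baseline: EvalReport, candidate: EvalReport,
                max_drop: float = 0.02) -> Tuple[bool, List[str]]:
    """Accept the update only if no metric regresses by more than max_drop."""
    regressions = [
        f.name for f in fields(EvalReport)
        if getattr(candidate, f.name) < getattr(baseline, f.name) - max_drop
    ]
    return not regressions, regressions

print(passes_gate(EvalReport(0.90, 0.80, 0.70), EvalReport(0.89, 0.85, 0.60)))
```

Here the update improves memory recall but is rejected because the user rating falls, which is the kind of trade-off a single metric would hide.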

Steps to Apply Transfer Learning in AI Characters

Creating AI roleplay characters with transfer learning involves transforming general language models into engaging, personalized companions. This process requires several key steps, each designed to shape the AI into a responsive and dynamic personality.

Setting Up Training Data

The quality of your training data can make or break your AI character. It’s the foundation that determines how well the AI understands and replicates personality traits. Start by gathering relevant data - such as conversational transcripts, character dialogues, and user interaction logs. If possible, layer in real-time user analytics to refine the dataset further.

Before training begins, clean the data thoroughly. This means removing duplicates, addressing missing values, and discarding incomplete records. Labeling conversations accurately by tone, style, and personality traits is crucial, because it helps the AI learn to mimic specific patterns and behaviors effectively. To ensure the model performs well, split the data into training, validation, and testing sets. Holding out validation and test data makes overfitting visible and helps confirm that the model remains robust.
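
The cleaning and splitting steps can be sketched in a few lines of Python. This assumes each record is a dict with hypothetical "text" and "trait" keys; it drops incomplete records, removes duplicates (ignoring case and surrounding spaces), and shuffles with a fixed seed before splitting into training, validation, and test sets.

```python
import random
from typing import Dict, List, Optional, Tuple

Record = Dict[str, Optional[str]]

def clean_and_split(records: List[Record], seed: int = 0,
                    val_frac: float = 0.1, test_frac: float = 0.1
                    ) -> Tuple[List[Record], List[Record], List[Record]]:
    seen = set()
    cleaned: List[Record] = []
    for rec in records:
        text = (rec.get("text") or "").strip()
        trait = rec.get("trait")
        if not text or not trait:   # missing value or incomplete record
            continue
        key = (text.lower(), trait)
        if key in seen:             # duplicate, ignoring case and outer whitespace
            continue
        seen.add(key)
        cleaned.append({"text": text, "trait": trait})

    rng = random.Random(seed)       # fixed seed gives a reproducible split
    rng.shuffle(cleaned)
    n_test = int(len(cleaned) * test_frac)
    n_val = int(len(cleaned) * val_frac)
    test = cleaned[:n_test]
    val = cleaned[n_test:n_test + n_val]
    train = cleaned[n_test + n_val:]
    return train, val, test
```

Splitting after deduplication matters: if the same line appeared in both training and test data, test scores would overstate how well the character generalizes.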

For best results, aim for 10 to 15 high-quality conversation examples per personality trait. If resources are limited, even five examples can work, as long as the style and tone are consistent. Whether you’re designing a realistic, cartoonish, or anime-inspired personality, uniformity across examples is critical. Once the data is ready, the focus shifts to selecting the right training methods.
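
A quick way to apply that rule of thumb is to count labeled examples per trait before training. In this Python sketch the thresholds mirror the 10-example and 5-example figures given above; the "trait" key and the status strings are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, Mapping

RECOMMENDED_MIN = 10  # lower end of the 10-15 examples per trait suggested above
ABSOLUTE_MIN = 5      # the floor that can work if style and tone are consistent

def coverage_report(examples: Iterable[Mapping[str, str]]) -> Dict[str, str]:
    """Classify each trait by how many labeled conversation examples it has."""
    counts = Counter(ex["trait"] for ex in examples)
    report = {}
    for trait, n in counts.items():
        if n >= RECOMMENDED_MIN:
            report[trait] = "ok"
        elif n >= ABSOLUTE_MIN:
            report[trait] = "minimal: check style consistency"
        else:
            report[trait] = "too few examples"
    return report

examples = [{"trait": "playful"}] * 12 + [{"trait": "shy"}] * 6 + [{"trait": "sarcastic"}] * 2
print(coverage_report(examples))
```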

Training Methods for Custom Personalities

One of the most efficient techniques for customizing AI personalities is Low-Rank Adaptation (LoRA). Instead of updating all of a pre-trained model’s weights, LoRA freezes them and trains small added low-rank matrices, making it possible to fine-tune personalities without massive computational resources. The original LoRA paper reports that, compared with full fine-tuning of GPT-3 175B, it reduces trainable parameters by about 10,000 times and GPU memory requirements by about 3 times, while also producing much smaller checkpoints. Moez Ali, founder of PyCaret, highlights its importance:
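
The savings follow from LoRA's structure: instead of updating a full d × k weight matrix W, it trains two small matrices B (d × r) and A (r × k) with a small rank r, so trainable parameters drop from d·k to r·(d + k). A quick Python check, using a hypothetical 4096 × 4096 layer and rank 8:

```python
from typing import Tuple

def lora_param_counts(d: int, k: int, r: int) -> Tuple[int, int]:
    """Trainable parameters for one d x k weight matrix: (full fine-tuning, LoRA rank r)."""
    full = d * k        # every entry of W is updated
    lora = r * (d + k)  # only B (d x r) and A (r x k) are updated
    return full, lora

full, lora = lora_param_counts(4096, 4096, 8)
print(full, lora, full // lora)  # 16777216 65536 256
```

The ratio grows as layers get wider relative to r, which is why the savings are largest on big models.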

"LoRA emerges as an indispensable technique in addressing the substantial challenges posed by the size and complexity of large models."

LoRA’s efficiency also holds at inference: its low-rank matrices can be merged into the pre-trained weights, so the fine-tuned model introduces no extra latency. This makes it well suited to platforms like Luvr AI, where users expect quick and seamless interactions.
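
Why there is no added latency: the LoRA update is ΔW = (α/r)·B·A, which can be added to the base weights W0 once before deployment. This pure-Python sketch (tiny made-up matrices, α = 2, r = 1) checks that the merged weights give the same output as running the base and low-rank paths separately.

```python
from typing import List

Matrix = List[List[float]]

def matmul(X: Matrix, Y: Matrix) -> Matrix:
    return [[sum(X[i][t] * Y[t][j] for t in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]

def matvec(M: Matrix, v: List[float]) -> List[float]:
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def lora_forward(W0: Matrix, A: Matrix, B: Matrix, x: List[float],
                 alpha: float, r: int) -> List[float]:
    """Unmerged LoRA: h = W0 x + (alpha / r) * B (A x); two paths on every call."""
    scale = alpha / r
    return [h + scale * d for h, d in zip(matvec(W0, x), matvec(B, matvec(A, x)))]

def merge_lora(W0: Matrix, A: Matrix, B: Matrix, alpha: float, r: int) -> Matrix:
    """Fold the update into the base weights once: W = W0 + (alpha / r) * B A."""
    scale = alpha / r
    delta = matmul(B, A)
    return [[w + scale * d for w, d in zip(w_row, d_row)] for w_row, d_row in zip(W0, delta)]

# Made-up 2x3 layer with a rank-1 adapter (B is 2x1, A is 1x3).
W0 = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
A = [[0.5, 0.0, 1.0]]
B = [[1.0], [2.0]]
x = [1.0, 2.0, 3.0]
W = merge_lora(W0, A, B, alpha=2.0, r=1)
print(lora_forward(W0, A, B, x, alpha=2.0, r=1), matvec(W, x))  # [8.0, 16.0] [8.0, 16.0]
```

After merging, serving the model is a single matrix multiply per layer, exactly as before fine-tuning; the trade-off is that swapping to a different character's adapter means un-merging or keeping separate merged copies.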

Another powerful option is Reinforcement Learning from Human Feedback (RLHF). OpenAI’s InstructGPT, introduced in January 2022, used RLHF to great effect: human evaluators ranked model outputs by how helpful, non-offensive, and accurate they were, those rankings trained a reward model, and the reward model then guided further fine-tuning. The result was better instruction-following, reduced toxicity, and improved truthfulness.

For specialized applications, combining LoRA with prefix tuning allows efficient fine-tuning with minimal data and computing power, making it easier to craft unique personality traits. After training, rigorous testing ensures the AI continues to improve with user input.
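
In InstructGPT, rankings were turned into a reward model using a pairwise loss, −log σ(r(chosen) − r(rejected)), which pushes the score of the preferred response above the rejected one. A minimal Python sketch of that loss with a linear reward model over hand-made features; the three features (in character, helpful, offensive) and the preference pairs are hypothetical, and a real reward model would be a fine-tuned language model rather than a linear function.

```python
import math
from typing import List, Sequence, Tuple

def log_sigmoid(z: float) -> float:
    """Numerically stable log(sigmoid(z))."""
    return -math.log1p(math.exp(-z)) if z >= 0 else z - math.log1p(math.exp(z))

def reward(w: Sequence[float], feats: Sequence[float]) -> float:
    return sum(wi * fi for wi, fi in zip(w, feats))

def pairwise_loss(w: Sequence[float], chosen: Sequence[float], rejected: Sequence[float]) -> float:
    """-log sigmoid(r(chosen) - r(rejected)): small when chosen clearly outscores rejected."""
    return -log_sigmoid(reward(w, chosen) - reward(w, rejected))

def train_reward_model(pairs: List[Tuple[List[float], List[float]]], dim: int,
                       lr: float = 0.5, epochs: int = 100) -> List[float]:
    w = [0.0] * dim
    for _ in range(epochs):
        for chosen, rejected in pairs:
            margin = reward(w, chosen) - reward(w, rejected)
            # d(loss)/d(margin) = -sigmoid(-margin), so step along (chosen - rejected)
            step = lr / (1.0 + math.exp(margin))
            w = [wi + step * (c - r) for wi, c, r in zip(w, chosen, rejected)]
    return w

# Hypothetical features per reply: [stays_in_character, helpful, offensive]
PAIRS = [
    ([1.0, 1.0, 0.0], [1.0, 0.0, 1.0]),
    ([1.0, 0.0, 0.0], [0.0, 0.0, 1.0]),
    ([1.0, 1.0, 0.0], [0.0, 1.0, 0.0]),
]
w = train_reward_model(PAIRS, dim=3)
print([round(v, 2) for v in w])
```

The learned weights end up rewarding in-character, helpful replies and penalizing offensive ones, purely from which reply was preferred in each pair.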

Testing and Improving Based on User Input

Once the AI character is trained, testing and refinement become ongoing priorities. Incorporate user feedback through surveys, in-app reporting tools, and ratings to identify areas for improvement. Advanced algorithms can process this feedback in real time, using natural language processing to detect trends and common issues in user interactions.

Focus on feedback that highlights recurring patterns rather than isolated comments. For example, if users frequently report that the AI struggles with understanding specific queries, such as order statuses, this signals an area that needs adjustment. Regular updates based on this feedback keep the AI aligned with user expectations. Additionally, keeping users informed about updates helps foster trust and transparency.
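
Separating recurring patterns from one-off comments can start as simple counting. This Python sketch assumes feedback has already been tagged (for example by an NLP classifier, not shown) with hypothetical issue tags, and reports only tags that appear in enough distinct feedback items, both in absolute count and as a share of all feedback; the thresholds are illustrative.

```python
from collections import Counter
from typing import Iterable, List, Mapping, Sequence

def recurring_issues(feedback: Sequence[Mapping[str, Iterable[str]]],
                     min_count: int = 3, min_share: float = 0.05) -> List[str]:
    """Return issue tags that recur across feedback items, most frequent first."""
    if not feedback:
        return []
    # Count each tag at most once per item, so one repetitive complaint
    # cannot masquerade as a pattern.
    counts = Counter(tag for item in feedback for tag in set(item["tags"]))
    total = len(feedback)
    recurring = [t for t, n in counts.items() if n >= min_count and n / total >= min_share]
    return sorted(recurring, key=lambda t: (-counts[t], t))

feedback = [{"tags": ["forgets_name"]}] * 6 + [{"tags": ["too_formal", "too_formal"]}] + [{"tags": []}] * 13
print(recurring_issues(feedback))  # ['forgets_name']
```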

To refine responses further, a trained reward model can be used at generation time: it scores several candidate responses and the highest-scoring one is selected, an approach commonly referred to as best-of-N or rejection sampling. Continuous monitoring and adaptation ensure the AI evolves to meet real-world challenges effectively. By combining structured data, training methods, and user feedback, AI roleplay characters can grow into highly engaging and responsive companions.
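
Best-of-N selection is short to express once a generator and a reward model exist. In this Python sketch both are passed in as plain functions; the canned-reply generator and the keyword-based score are placeholders standing in for a real language model and a trained reward model.

```python
import itertools
from typing import Callable

def best_of_n(prompt: str,
              generate: Callable[[str], str],
              score: Callable[[str, str], float],
              n: int = 4) -> str:
    """Best-of-N: sample n candidate replies and return the one with the highest reward."""
    if n < 1:
        raise ValueError("n must be at least 1")
    candidates = [generate(prompt) for _ in range(n)]
    return max(candidates, key=lambda reply: score(prompt, reply))

# Placeholders: a "generator" that cycles through canned replies and a "reward model"
# that simply prefers replies asking about the user's day.
_canned = itertools.cycle(["ok.", "Sure!", "How was your day? Tell me everything!", "k"])

def toy_generate(prompt: str) -> str:
    return next(_canned)

def toy_score(prompt: str, reply: str) -> float:
    return 1.0 if "day" in reply else 0.0

print(best_of_n("I'm back from work", toy_generate, toy_score))
```

The cost grows linearly with n, since every candidate must be generated and scored, so n is usually kept small for real-time chat.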


Benefits and Drawbacks of Transfer Learning in Roleplay AI

Transfer learning simplifies the development of AI roleplay characters by reusing pre-trained models. As discussed earlier in the context of personality development, the strengths and weaknesses of transfer learning revolve around finding the right balance between adaptability and consistency.

Pros and Cons Comparison

| Benefits | Drawbacks |
|---|---|
| Reduced Development Costs: Saves on computational resources by reusing pre-trained models, cutting down on training time, data needs, and processing power | Domain Mismatch Issues: Major differences between the source and target data can limit how well knowledge transfers |
| Faster Time to Market: Speeds up the training process for new models, allowing quicker deployment | Layer Selection Complexity: Deciding which layers to transfer is tricky - early layers capture general features, while deeper layers focus on task-specific details |
| Improved Performance with Limited Data: Boosts results on new tasks even when training data is scarce | Overfitting Risks: Small target datasets, especially with imbalanced classes, can lead to overfitting |
| Better Generalization: Retraining on new datasets enhances the model's ability to generalize | Source Model Dependency: Relies heavily on the quality and relevance of the pre-trained model |
| Overfitting Prevention: Broad data representation reduces the likelihood of overfitting | Computational Complexity: Large pre-trained models can demand significant computational resources |
| Cross-Domain Knowledge Transfer: Enables the application of learned features to different domains, creating versatile character personalities | Interpretability Challenges: Pre-trained models often act like "black boxes", making their decision-making hard to interpret |
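
The layer-selection question in the table is often handled by freezing the early layers and fine-tuning only the top few. A minimal Python sketch that builds such a plan by layer index; the 12-layer model and the choice of 2 trainable layers are hypothetical, and in a framework such as PyTorch the plan would be applied by setting `requires_grad = False` on the frozen layers' parameters.

```python
from typing import Dict

def freezing_plan(num_layers: int, num_trainable_top: int) -> Dict[int, bool]:
    """Map layer index (0 = closest to the input) to whether it is fine-tuned.

    Early layers, which tend to capture general features, stay frozen;
    only the top `num_trainable_top` layers are updated.
    """
    if not 0 <= num_trainable_top <= num_layers:
        raise ValueError("num_trainable_top must be between 0 and num_layers")
    first_trainable = num_layers - num_trainable_top
    return {i: i >= first_trainable for i in range(num_layers)}

plan = freezing_plan(num_layers=12, num_trainable_top=2)
print([i for i, trainable in plan.items() if trainable])  # [10, 11]
```

Unfreezing more layers gives the character more room to diverge from the base model, but also raises the overfitting risk listed in the table when the target dataset is small.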

While these points summarize the major advantages and challenges, there’s more to consider. The economic stakes are large: industry forecasts project that AI technologies could add trillions of dollars to the global economy by 2030. Clarifai describes the shift that transfer learning has brought to AI development:

"Transfer learning has introduced a new paradigm, allowing AI systems to build upon pre-existing knowledge, thereby streamlining the learning process for novel tasks."

However, maintaining a balance between consistency and adaptability is a constant challenge. AI roleplay characters need to preserve their core personality traits while adjusting to user interactions. Overly specific fine-tuning for a role can sometimes hurt a model’s ability to generalize and weaken its commonsense reasoning.

Other issues, like memory constraints and shallow responses, can also affect the user experience.

Despite these hurdles, the benefits of transfer learning often outweigh its disadvantages when applied thoughtfully. By using well-planned strategies, high-quality training datasets, and ongoing adjustments informed by user feedback, platforms like Luvr AI can create engaging and adaptive AI roleplay experiences. These trade-offs highlight the need for continuous improvement in developing AI roleplay characters.

Future Developments in Transfer Learning for AI Roleplay Characters

AI roleplay characters are advancing at an impressive pace, with transfer learning playing a major role in this transformation. Emerging techniques are shaping more lifelike, interactive, and engaging AI characters, setting the stage for platforms like Luvr AI to deliver highly personalized user experiences.

Learning Across Diverse Domains

One promising direction in transfer learning is its ability to draw from various fields to enrich AI roleplay. For example, researchers have successfully applied transfer learning in areas like medical imaging and retail, improving outcomes such as diagnostic precision and customer engagement while minimizing the need for extensive data.

Techniques like self-supervised learning and federated learning are gaining momentum when combined with transfer learning. Self-supervised learning lets AI learn from natural, unlabeled conversational data, while federated learning trains on users’ devices and shares only model updates, so raw conversations do not have to leave the device. Such advancements are paving the way for AI characters to adapt dynamically, offering real-time responses that feel more intuitive and natural.
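
The aggregation step of federated averaging (FedAvg) shows where the privacy benefit comes from: each device fine-tunes locally and sends back only its model weights, which the server averages in proportion to how much data each device trained on. A minimal Python sketch with made-up weight vectors; production systems typically add further safeguards such as secure aggregation, which this sketch omits.

```python
from typing import List, Sequence

def federated_average(client_weights: Sequence[Sequence[float]],
                      client_sizes: Sequence[int]) -> List[float]:
    """FedAvg aggregation: average client weight vectors, weighted by local dataset size.

    Only these weight vectors reach the server; raw conversations stay on-device.
    """
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need at least one client and one dataset size per client")
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
            for i in range(dim)]

# Two hypothetical devices: the second trained on three times as much data.
print(federated_average([[1.0, 0.0], [3.0, 2.0]], [1, 3]))  # [2.5, 1.5]
```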

Adapting Instantly to User Preferences

Building on insights from cross-domain learning, adaptive AI systems are now capable of responding instantly to user emotions and preferences. By leveraging reinforcement learning alongside transfer learning, these systems continuously evolve to meet changing user needs. Unlike traditional rule-based models, adaptive AI learns from new patterns and updates its algorithms in real time.
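
The simplest form of this kind of reinforcement learning is a multi-armed bandit, swapped in here as an illustration rather than a description of any platform's system. In this Python sketch an epsilon-greedy bandit picks among hypothetical response styles and keeps a running average of user feedback (1 for a positive reaction, 0 otherwise), so the character drifts toward the styles a particular user responds to while still occasionally trying others.

```python
import random
from typing import Iterable, Optional

class StyleBandit:
    """Epsilon-greedy bandit over response styles; reward is 1.0 for positive feedback, else 0.0."""

    def __init__(self, styles: Iterable[str], epsilon: float = 0.1, seed: Optional[int] = None):
        self.styles = list(styles)
        self.epsilon = epsilon
        self.counts = {s: 0 for s in self.styles}
        self.values = {s: 0.0 for s in self.styles}  # running mean reward per style
        self.rng = random.Random(seed)

    def choose(self) -> str:
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.styles)               # explore a random style
        return max(self.styles, key=lambda s: self.values[s])  # exploit the best so far

    def update(self, style: str, reward: float) -> None:
        self.counts[style] += 1
        self.values[style] += (reward - self.values[style]) / self.counts[style]  # incremental mean

# Simulated user who only reacts positively to calm replies.
bandit = StyleBandit(["playful", "calm", "teasing"], epsilon=0.1, seed=0)
for _ in range(500):
    style = bandit.choose()
    bandit.update(style, 1.0 if style == "calm" else 0.0)
print(bandit.values)
```

A bandit ignores conversational context; richer systems condition the choice on the current message, but the feedback-driven update loop is the same idea.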

On platforms like Luvr AI, AI characters are becoming more attuned to subtle changes in user behavior, tone, and emotional states. Imagine a virtual companion that acts like a personal coach - identifying emotional cues and crafting tailored responses. Advanced emotional AI can detect shifts in tone and provide empathetic feedback, while techniques like progressive layer freezing allow the AI to adapt to individual preferences without losing its core personality traits.

Merging Voice, Images, and Text for Immersive Experiences

Transfer learning is also driving the integration of voice, images, and text, creating richer, more immersive interactions. Multimodal AI, which combines text, visuals, and audio, is taking human-AI engagement to a whole new level. Platforms like Luvr AI already enable users to exchange images and audio messages, opening the door for even deeper connections.

By blending advanced natural language processing with visual and auditory elements, these systems create interactions that feel more human. For instance, multimodal AI can interpret emotions by analyzing facial expressions, voice tone, and textual sentiment. Future developments could even include augmented reality (AR) and virtual reality (VR) experiences, where AI characters offer real-time visual, auditory, and tactile feedback, making them feel truly present.
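
A common baseline for combining these signals is late fusion: each modality's model outputs its own emotion probabilities, and the system takes a weighted average. A minimal Python sketch; the emotion labels, scores, and modality weights are hypothetical, and the function simply skips any modality that is missing (for example, no camera input).

```python
from typing import Dict, Mapping

def fuse_emotions(modality_scores: Mapping[str, Mapping[str, float]],
                  weights: Mapping[str, float]) -> Dict[str, float]:
    """Late fusion: weighted average of per-modality emotion probabilities.

    Only modalities present in modality_scores contribute, and their weights are
    renormalized, so a missing camera or microphone feed is handled gracefully.
    """
    emotions = set()
    for scores in modality_scores.values():
        emotions.update(scores)
    total_w = sum(weights[m] for m in modality_scores)
    return {
        e: sum(weights[m] * modality_scores[m].get(e, 0.0) for m in modality_scores) / total_w
        for e in emotions
    }

scores = {
    "text": {"happy": 0.2, "sad": 0.8},
    "voice": {"happy": 0.4, "sad": 0.6},
    "face": {"happy": 0.1, "sad": 0.9},
}
fused = fuse_emotions(scores, {"text": 0.5, "voice": 0.3, "face": 0.2})
print(max(fused, key=fused.get))  # sad
```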

Recent advances in prompt engineering are also helping with challenges like data integration and scalability. Because transfer learning lets AI systems reuse pre-trained representations across domains, it significantly cuts data requirements and training time, so an AI character trained primarily on text can be extended to voice and image inputs with far less data than building those capabilities from scratch would require.

As cross-domain learning, real-time adaptability, and multimodal communication continue to evolve, AI roleplay experiences are becoming more engaging, responsive, and emotionally intelligent - all thanks to the transformative potential of transfer learning.

Conclusion: Transfer Learning's Impact on AI Roleplay Development

Transfer learning has reshaped the way AI roleplay characters are created, making them more personalized, interactive, and engaging. By using pre-trained models and fine-tuning them with specific data, developers can design AI characters that align with precise personality traits and behaviors - without starting from scratch.

This approach not only cuts down on training time but also makes the technology more accessible. Platforms like Luvr AI benefit greatly from transfer learning, enabling the development of AI companions that adapt to user preferences while retaining their core characteristics. These AI characters evolve through user feedback, refining their responses to better match shifts in tone, behavior, and emotional cues.

On a technical level, transfer learning boosts model performance by utilizing pre-trained knowledge, minimizing overfitting, and allowing faster training even with limited datasets. This makes it a cornerstone for crafting advanced AI roleplay experiences. It also lays the groundwork for next-gen AI characters that can seamlessly combine voice, visuals, and text, delivering deeply immersive interactions.

FAQs

How does transfer learning make AI roleplay characters more dynamic and personalized?

Transfer learning has revolutionized how AI roleplay characters are created. Instead of starting from scratch, pre-trained models can quickly adapt to different styles, genres, or even individual user preferences. This approach not only saves time but also cuts down on the computational resources required, streamlining the entire character development process.

By tapping into extensive pre-existing datasets, transfer learning allows AI to craft characters that feel more distinct and engaging. The result? Highly personalized and immersive roleplay experiences that cater to users' unique tastes, making interactions richer and more tailored.

What challenges can arise when using transfer learning to create AI personalities?

Transfer learning comes with its own set of hurdles when it’s applied to building AI personalities. A major concern is the quality of the pre-trained model. If the base model isn’t aligned with the specific traits or behaviors needed for the personality, the results can be disappointing - either the model struggles to generalize, or it becomes overly rigid. This can make it challenging to develop characters that feel dynamic and engaging.

Another issue lies in adapting to highly personalized or complex characters. These types of personalities are crucial for creating AI that feels authentic and emotionally engaging. However, the process can get tricky when there’s a lack of sufficient data or when data-sharing restrictions come into play. Such limitations make fine-tuning the model more difficult, potentially affecting the overall ability to deliver lifelike and immersive AI roleplay experiences.

How does user feedback help improve AI roleplay characters?

User feedback plays a key role in improving AI roleplay characters. By examining elements like response ratings, interaction trends, and user suggestions, developers can adjust character behaviors, refine accuracy, and craft more engaging experiences.

This continuous refinement helps AI characters align better with user preferences, offering interactions that feel more dynamic and tailored to individual expectations.