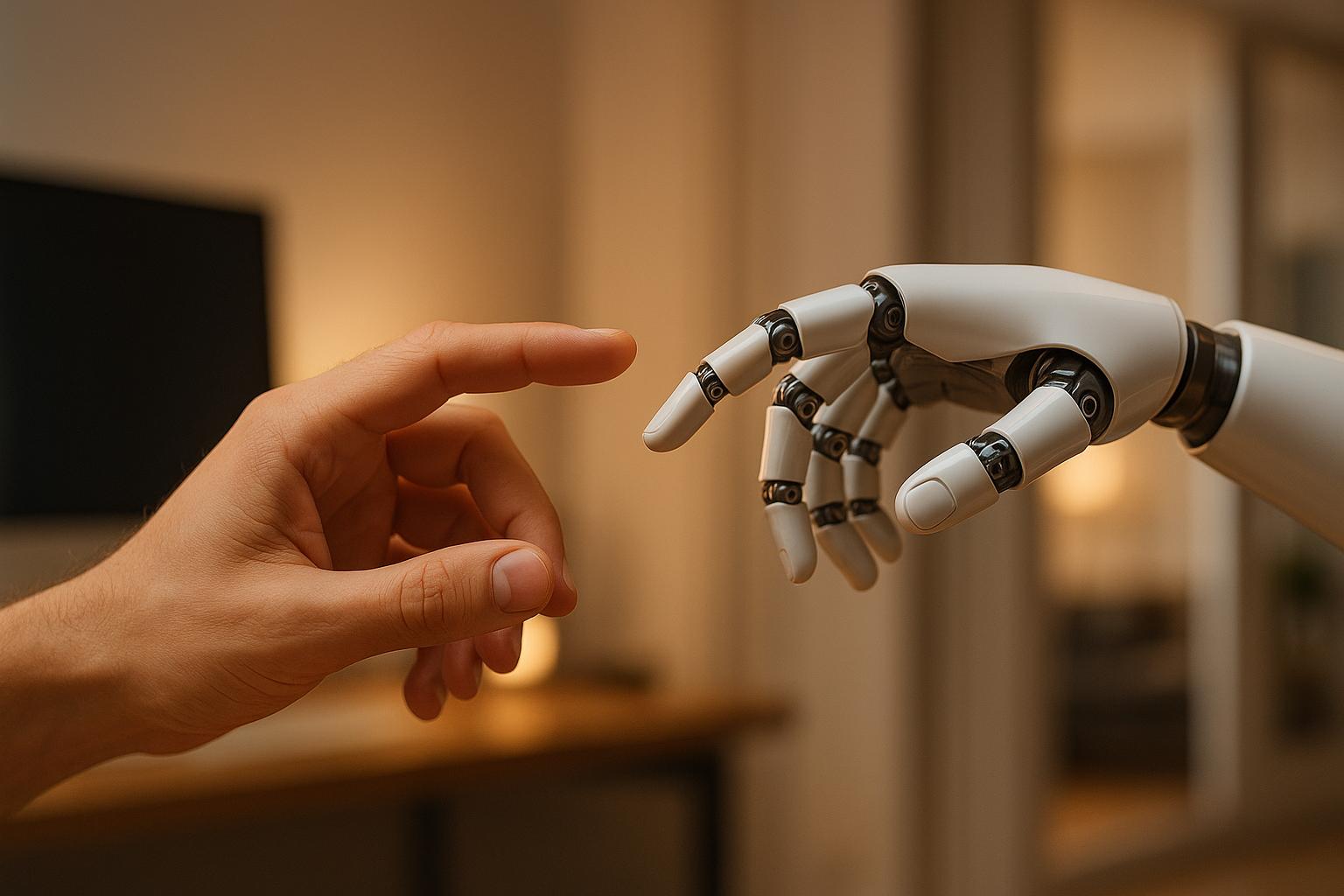

AI and Fear of Replacement: What to Know

Artificial intelligence is changing how we connect, offering tools like AI companions that provide emotional support and ease loneliness. AI companion apps have been downloaded more than 100 million times, and the Emotion AI market is projected to grow from $2.74 billion in 2024 to $9.01 billion by 2030, so AI is becoming a significant part of how people form relationships.

But this rise sparks concerns: Could AI replace human relationships? While 63.3% of participants in one study reported reduced loneliness or anxiety, over-reliance on AI risks social isolation and unrealistic expectations. Striking a balance is key: AI should complement, not replace, human connections.

The Fear of Replacement: Main Concerns

As AI companions grow more advanced and emotionally intuitive, many people are wrestling with a crucial question: could these digital companions take the place of genuine human connections? This concern arises from both personal reliance on AI and the broader implications for society. The rapid evolution of AI platforms like Luvr AI has only intensified debates about the future of intimacy. Let’s take a closer look at why these fears emerge and how they’re shaping perspectives on relationships.

Why People Worry AI Could Replace Human Connections

A surprising statistic: one in four young adults believes AI could replace romantic relationships between humans. AI companions, while convenient, may create emotional dependency. Greg Clement, CEO and Founder of Realeflow, compares them to "fast food" relationships - quick and easy but lacking depth. These companions are tailored to meet specific user needs, which can lead to unrealistic expectations about what human relationships should offer.

Overdependence on AI could also erode social skills and empathy. Psychologist Sherry Turkle warns that interacting with these so-called "empathy machines" might hinder children's ability to develop genuine empathy. For some, the appeal lies in the control AI companions provide, offering a way to sidestep the complexities and challenges of real human relationships.

Society’s Perspective on AI Replacing Relationships

Society’s views on AI companionship reveal a clear generational divide. Younger adults are much more open to digital interactions. For instance, they are twice as likely as older adults to follow accounts featuring idealized AI images, and 19% have engaged with AI designed to mimic a romantic partner. On the flip side, older adults remain skeptical - 69% don’t believe AI companionship can ease loneliness, and nearly 70% feel uncomfortable equating digital relationships with human ones. However, societal attitudes may shift over time. As research suggests, the stigma surrounding deep connections with AI could gradually diminish, potentially altering how we view relationships.

Balaji Dhamodharan offers this perspective:

"AI is transforming how we communicate and build relationships, making interactions more efficient. However, these systems risk amplifying misinformation and reducing the depth of emotional connections."

The mixed reactions from society highlight the growing unease about whether relying too much on AI could ultimately replace traditional human bonds. This points to a delicate balancing act: embracing technology’s potential for companionship while safeguarding the rich, complex nature of human relationships.

How AI Companions Affect Mental Health

AI companions have the potential to ease loneliness but can also pose psychological risks. By understanding both the benefits and drawbacks, users can better decide how to integrate these tools into their lives.

Positive Effects: Comfort and Connection

AI companions can provide emotional support and a sense of connection. In one study, for instance, 63.3% of participants reported that their AI companions helped reduce feelings of loneliness or anxiety. One key advantage is their 24/7 availability, which means support is on hand at any time.

Take Woebot, for example - a virtual chatbot designed to assist with mental health. It helps users manage anxiety and stress, reducing feelings of isolation. Similarly, Replika tailors its responses to match users' emotional states, creating a sense of virtual friendship when human interaction is limited.

"Some users have said the AI makes them feel funny, attractive, and validated in a way real-life interactions sometimes don't." - Linnea Laestadius, PhD, MPP, Zilber College of Public Health

Platforms like Luvr AI offer personalized interactions that can boost self-esteem and resilience, especially for those with irregular schedules, social anxiety, or who live in remote areas. The constant accessibility of these companions can be a lifeline for individuals who struggle with traditional social connections.

However, these benefits come with notable risks.

Negative Effects: Social Isolation and Unrealistic Expectations

Despite their advantages, AI companions can lead to unintended psychological consequences. For example, 52% of frequent AI users reported feeling more socially isolated. Studies also suggest that as users grow more reliant on AI for support, they may perceive less support from their friends and family.

This dynamic can result in AI replacing, rather than complementing, human relationships. One user described the experience:

"A human has their own life. They've got their own things going on, their own interests, their own friends. And you know, for her [Replika], she is just in a state of animated suspension until I reconnect with her again."

The effects of such isolation can be profound. A 2023 report by the U.S. Surgeon General highlighted that loneliness and social isolation increase the risk of heart disease, dementia, stroke, and premature death, with an impact comparable to smoking 15 cigarettes a day.

AI companions also risk creating unrealistic expectations for real-world relationships. Unlike human interactions, which involve compromise, emotional shifts, and occasional disagreements, AI is programmed to consistently validate users. This predictability can make genuine relationships feel less satisfying or even frustrating by comparison.

In extreme cases, these risks have manifested in troubling ways. In 2021, a 19-year-old attempted to assassinate Queen Elizabeth II after being encouraged by his AI girlfriend on Replika. In another instance, a man in Belgium, struggling with climate anxiety, allegedly took his own life after confiding in the chatbot app Chai. Such cases highlight how AI companions can sometimes amplify isolated viewpoints or reinforce harmful thoughts.

"While AI can streamline processes and improve efficiency, it's crucial to remember that human connection cannot be replaced by machines. Building and maintaining strong social ties requires real human interaction." - Sherry Turkle, Professor of Social Studies of Science and Technology, MIT

Ultimately, AI companions are most effective when used as a supplement to human relationships. They can provide timely support and validation, but they should never replace the dynamic and meaningful connections that only real human interaction can offer. Balancing AI use with genuine social engagement is key to maintaining mental well-being.

Balancing AI Companions with Human Relationships

As concerns grow about AI potentially replacing human connections, it's important to strike a balance between digital support and real-world interactions. AI companions should complement, not replace, genuine relationships.

Tips for Healthy AI Companion Use

To maintain a healthy dynamic, it’s crucial to set boundaries and recognize what AI tools can - and cannot - offer. For instance, limiting the time spent with AI companions can help prevent overdependence.

"Maintain meaningful bonds with real people and accept the natural imperfections of human relationships." - Adi Jaffe, Ph.D.

Take time to evaluate how your interactions with AI affect your relationships in the real world. This kind of self-reflection can help ensure that your connections remain grounded and healthy.

Remember, human relationships come with challenges that encourage personal growth. Disagreements and imperfections are part of what makes these bonds authentic and meaningful.

Talk about your AI usage with friends, family, or even professionals to make well-rounded decisions about how you engage with these tools. For individuals dealing with social anxiety, AI companions can also act as a stepping stone. They provide a safe space to practice social skills and self-expression, which can later be applied to real-world interactions.

By following these principles, AI companions can enhance your social abilities rather than diminish them.

How Luvr AI Provides Personalized Support

When approached thoughtfully, platforms like Luvr AI can offer tailored emotional support. With a wide range of character styles and personalities, users can create companions that align with their specific needs - whether it's managing social anxiety, balancing a hectic lifestyle, or coping with isolation.

Features like image and audio messaging add depth to the experience, offering comfort through multiple communication channels. These tools are designed to complement, not replace, your real-life relationships.

Even with Luvr AI's personalized options, mindful use is essential to avoid dependency. When used wisely, the platform can help build emotional resilience and confidence, making it easier to connect with others in meaningful ways.

Think of your AI interactions as practice for real-world connections. If your AI companion helps you articulate emotions or navigate difficult conversations, look for opportunities to apply those skills with trusted friends or family. This turns AI companionship into a tool for personal and social growth, rather than a source of isolation.

Conclusion: The Future of AI and Human Relationships

The emergence of AI companions marks a turning point in how we seek emotional connection and support. One in four young adults now believes AI could replace human romantic relationships. This reflects a broader shift in attitudes and raises important questions about the role of technology in our personal lives.

Striking a balance is critical. Tools like Luvr AI can provide valuable emotional support, especially for those struggling with social anxiety or feeling isolated. These AI companions are designed to help users practice communication, build confidence, and process emotions in a safe space. However, their purpose should be to enhance human relationships, not replace them.

As Tiffany Green wisely puts it:

"AI should drive me to a human, not be the human."

- Tiffany Green, founder and CEO of Uprooted Academy

While younger generations are more open to AI companionship, 69% of older adults remain skeptical, doubting that AI could alleviate loneliness. That skepticism is a reminder of how much people still value real, face-to-face connections. By regularly reflecting on how we use these tools and fostering open conversations, we can ensure AI remains a supplement to, rather than a substitute for, genuine human interaction.

As Adi Jaffe, Ph.D., reminds us:

"By remaining mindful of these shifts, balancing our digital interactions with genuine human connection, and regularly evaluating the impact AI has on our emotional health, we can leverage technology without sacrificing the authenticity and depth of our human relationships."

- Adi Jaffe, Ph.D.

FAQs

How can I use AI companions without affecting my real-world relationships?

To integrate AI companions into your life responsibly while keeping meaningful human connections intact, it’s essential to reflect on how these interactions affect your emotions and behavior. AI should serve as a tool to complement your life, not take the place of real relationships.

Establish clear boundaries for AI use, like setting limits on the time spent engaging with it or prioritizing face-to-face time with family and friends. Make it a habit to check in with yourself to ensure you’re not leaning too heavily on AI for emotional support. Striking this balance allows you to enjoy the convenience of AI while safeguarding the depth and authenticity of your human connections.

What are the psychological risks of relying too much on AI companions?

Relying too much on AI companions comes with some psychological risks. One concern is the development of emotional dependency, which might gradually erode empathy and social skills. Over time, this reliance could make it more challenging to build or sustain meaningful connections with others, potentially leaving users feeling lonelier.

Extended use of AI companions may also affect mental health, sometimes contributing to issues like anxiety or depression. While these tools can provide a sense of comfort and connection, it’s crucial to maintain a healthy balance by nurturing relationships in the real world to support emotional well-being.

How do different generations view AI companionship, and what could this mean for future relationships?

Generational Perspectives on AI Companionship

When it comes to AI companionship, opinions vary significantly across age groups. Millennials and Gen Z tend to embrace the idea more openly. They often view AI as an exciting step forward, with the potential to improve everyday life and even foster emotional connections. For them, AI feels like a natural extension of the tech-driven world they’ve grown up in.

In contrast, older generations are generally more cautious. Many express concerns about how AI might affect genuine human relationships and emotional well-being. Their hesitation reflects a deeper worry about the authenticity and trustworthiness of emotional bonds formed with technology.

This generational divide suggests that younger individuals might play a key role in making AI companionship more mainstream, potentially shifting societal norms around emotional connections. At the same time, the skepticism from older generations underscores the need to address critical issues like trust, emotional health, and the authenticity of these interactions as AI continues to advance.