
AI-generated porn isn't just a new category of adult content; it's a completely different way of creating it. We're talking about explicit synthetic media produced by artificial intelligence, either generated from nothing more than a few lines of text or made by manipulating existing photos. It marks a massive shift: complex algorithms, not cameras and studios, now produce hyper-realistic adult material, much of it depicting people who never existed.

What Is AI Porn and Why Is It Spreading So Fast

At its heart, AI porn is born from generative artificial intelligence. This isn't the kind of AI that just analyzes data; it's designed to create something entirely new.

Imagine you have a supremely talented digital artist who can paint anything you describe. You give them instructions—a scene, a person, a specific act—and they build that image from the ground up, pixel by pixel. That's essentially what these AI models do. This completely cuts out the need for human actors, expensive sets, or film crews, making content creation instantaneous and endlessly personalizable.

So, why is this technology suddenly everywhere? It’s the result of a perfect storm: accessibility, high demand, and mature technology all hitting at the same time. Not long ago, making synthetic media was a job for PhDs with supercomputers. Today, user-friendly apps have torn down those walls, letting literally anyone generate explicit content with a few taps on a screen. This has unleashed a tidal wave of user-generated material that caters to every niche fantasy imaginable—far beyond what traditional porn could ever offer.

The Driving Forces Behind Its Growth

The explosion of AI-generated adult content is no accident. It is driven by a few powerful trends that reinforce one another, creating a cycle in which easy access drives demand, and that demand pushes developers to build even better tools.

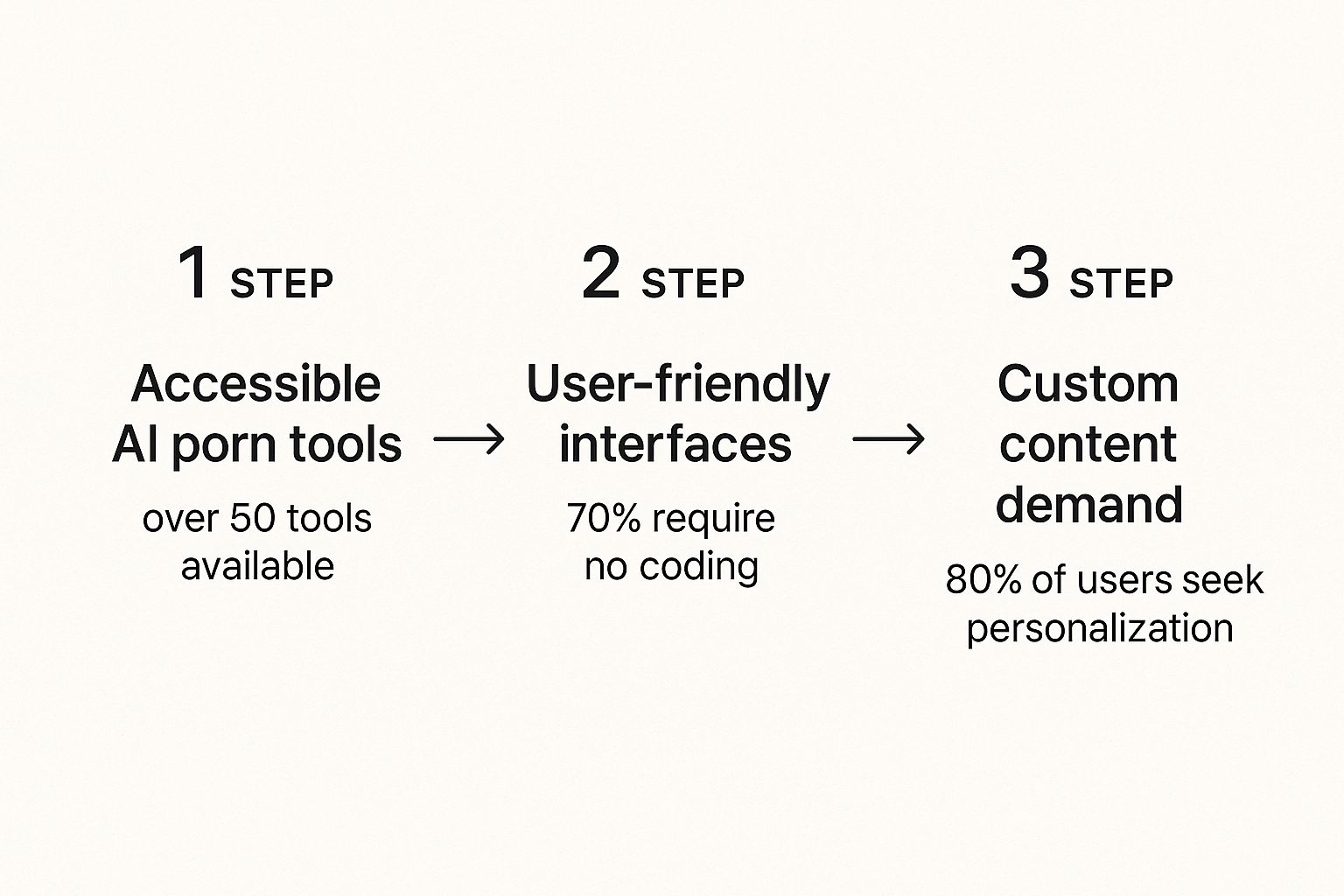

Three key things are really pushing this forward:

- Widespread Tool Availability: Dozens of AI platforms have popped up that specialize in creating adult content. This fierce competition has sparked rapid innovation, making the tools more powerful and bringing them to a mainstream audience.

- Ease of Use: This is the big one. An estimated 70% of these tools require zero coding skills. Their simple, intuitive interfaces mean anyone with a smartphone can now be a creator.

- Unprecedented Customization: The real hook for most people is the power to create perfectly personalized content. In fact, surveys reveal that nearly 80% of users are drawn in by the chance to bring their specific fantasies to life—something conventional porn could never do.

This infographic breaks down the simple three-step loop that’s fueling the market’s growth, from easy-to-use tools to the creation of deeply personal content.

As you can see, it’s a self-reinforcing cycle. Accessible tech meets a deep-seated desire for custom experiences, and the result is explosive growth. While this technological leap offers incredible creative freedom for some, it is also opening a Pandora's box of ethical and safety dilemmas that society is only now starting to grapple with.

How AI Creates Hyper-Realistic Adult Content

To really get your head around the explosion of AI porn, you have to pop the hood and look at the technology making it all happen. This isn't black-box magic; it's a specific, trainable process in which algorithms learn the statistical patterns of real-world images from enormous numbers of examples. The final images can feel so real because the AI isn't just cutting and pasting. It generates every pixel from a learned model of what makes a photo look authentic.

Think of an AI model as an apprentice artist who has studied millions upon millions of pictures. It doesn’t just memorize them. Instead, it learns the underlying patterns—the way light plays on skin, the texture of hair, the subtle curves of the human body. This massive library of visual information is its training ground. So, when you feed it a text prompt, it’s not pulling a specific photo; it’s drawing on that vast knowledge to build something entirely new that still follows the rules of our reality.

The truly wild part is how accessible this has all become. What once demanded a team of data scientists and a server farm's worth of computing power can now be done on a website. This shift has turned passive viewers into active creators, opening up a technology with some pretty profound—and often troubling—consequences.

The Dueling AIs: A Forger and a Critic

One of the first big breakthroughs in AI image generation came from something called a Generative Adversarial Network, or GAN. The idea behind it is brilliant in its simplicity. Just imagine two AIs locked in a constant battle of wits.

The first AI is the Generator. Think of it as a master art forger. Its only job is to create fake images that look so convincing they could pass for the real deal. It starts out making garbage—just random digital noise—but it learns and gets better with every single attempt.

The second AI is the Discriminator, which plays the role of a sharp-eyed art critic. Its sole purpose is to spot the forgeries. During training it is shown a mix of genuine photos from a massive dataset and fresh fakes from the Generator, and it learns what to look for to tell the two apart.

The result is a digital duel. The Generator tries to fool the Discriminator, and the Discriminator gets better at catching fakes. This constant feedback loop pushes the Generator toward increasingly realistic images; in the ideal case, the critic ends up unable to reliably tell the fakes from real photos.

This competitive process is what pushed the boundaries of realism in the early days of AI art. Every failure was a lesson, and after millions of rounds, the forger became a master artist. While newer methods are now more common, GANs laid the critical groundwork for the hyper-realistic AI porn we see today.
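The forger-versus-critic duel can be written down as two loss functions, one per network. The sketch below is an illustrative assumption, not any product's code: it uses the binary cross-entropy formulation from the original GAN paper (Goodfellow et al., 2014), including the common "non-saturating" generator loss, and it uses plain probabilities for the critic's scores instead of real neural networks.

```python
import math


def bce(p: float, label: float) -> float:
    """Binary cross-entropy between a predicted probability p and a 0/1 label."""
    eps = 1e-12                      # clamp so log() never receives exactly 0
    p = min(max(p, eps), 1.0 - eps)
    return -(label * math.log(p) + (1.0 - label) * math.log(1.0 - p))


def discriminator_loss(d_real: float, d_fake: float) -> float:
    """Critic's loss: low when real images score near 1 and fakes near 0."""
    return bce(d_real, 1.0) + bce(d_fake, 0.0)


def generator_loss(d_fake: float) -> float:
    """Forger's non-saturating loss: low when the critic scores its fake near 1."""
    return bce(d_fake, 1.0)


# A sharp critic that spots the fake: low critic loss, high forger loss.
print(round(discriminator_loss(d_real=0.9, d_fake=0.1), 3))  # 0.211
print(round(generator_loss(d_fake=0.1), 3))                   # 2.303

# The ideal end point: the critic can only guess 0.5 for everything.
print(round(discriminator_loss(d_real=0.5, d_fake=0.5), 3))  # 1.386
```

Training alternates between nudging the critic to lower its loss and nudging the forger to lower its own. The value 2 ln 2 ≈ 1.386 is the critic's loss when it can do no better than a coin flip.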

Sculpting an Image Out of Digital Static

Lately, a different and even more powerful technique has taken over: diffusion models. If a GAN is like a forger and a critic, a diffusion model is more like a sculptor who starts with a block of digital marble and carves out a masterpiece.

The process starts with a real picture from the training data. A fixed, simple recipe (not the AI itself) then adds tiny amounts of digital "noise," like TV static, in small steps until the original image is completely gone, leaving just a chaotic mess of pixels. Crucially, because the recipe is known, the exact noise added at every step is known too, and that noise becomes the answer key the AI is trained against.

After seeing millions of images noised to every possible degree, the AI learns to look at a noisy picture and estimate the noise that was added to it. That is precisely the skill needed to undo one step of the process. The real magic happens when you run that process in reverse.
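The noising recipe is simple enough to write out. The sketch below assumes the DDPM formulation (Ho et al., 2020) with its linear schedule of per-step noise amounts (beta from 0.0001 to 0.02 over 1,000 steps); the article does not say which schedule any particular generator uses. A handy property of this recipe is that you can jump straight to any step `t` in one shot, which is how training examples are made: pick an image, pick a step, add known noise, and ask the network to predict that noise. The 4-pixel "image" is a toy stand-in.

```python
import math
import random


def linear_alpha_bars(T: int = 1000, beta_start: float = 1e-4,
                      beta_end: float = 0.02) -> list[float]:
    """Running product of (1 - beta_t) for a linear noise schedule:
    alpha_bars[t] is the squared scale factor left on the original image."""
    alpha_bars, running = [], 1.0
    for t in range(T):
        beta = beta_start + (beta_end - beta_start) * t / (T - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(x0: list[float], t: int, alpha_bars: list[float],
              noise: list[float]) -> list[float]:
    """Jump straight to step t: x_t = sqrt(a_bar) * x0 + sqrt(1 - a_bar) * noise."""
    a_bar = alpha_bars[t]
    return [math.sqrt(a_bar) * x + math.sqrt(1.0 - a_bar) * e
            for x, e in zip(x0, noise)]


alpha_bars = linear_alpha_bars()
image = [0.8, -0.2, 0.5, 0.1]                 # toy 4-pixel "image", values in [-1, 1]
rng = random.Random(0)
noise = [rng.gauss(0.0, 1.0) for _ in image]  # known noise = the training target

for t in (0, 250, 999):
    noisy = add_noise(image, t, alpha_bars, noise)
    print(t, round(math.sqrt(alpha_bars[t]), 4), [round(v, 2) for v in noisy])
```

The middle column is how strongly the original image still shows through: almost fully at step 0 and under 1% by step 999, where the result is essentially pure static.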

To create a new image, the AI starts with a canvas of pure random static. Guided by your text prompt, it works backward one step at a time, each time estimating the noise and removing a little of it. It's like a sculptor chipping away at a block, knowing precisely where to carve to reveal the figure hidden inside. This meticulous, step-by-step refinement allows for an incredible level of detail and control, making diffusion models the go-to for today's best NSFW AI generators.
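Running the process backward can be sketched as well. This version assumes DDPM-style ancestral sampling, and because no trained model is available here, it swaps in a hypothetical `oracle` noise predictor that "knows" the dataset contains a single image, `TARGET`. In a real generator that predictor is a large neural network that also receives the step number and the text prompt as conditioning; neither is shown.

```python
import math
import random


def linear_betas(T: int = 1000, beta_start: float = 1e-4,
                 beta_end: float = 0.02) -> list[float]:
    """Per-step noise amounts for a linear DDPM-style schedule."""
    return [beta_start + (beta_end - beta_start) * t / (T - 1) for t in range(T)]


def sample(predict_noise, size: int, betas: list[float],
           rng: random.Random) -> list[float]:
    """Ancestral sampling: start from static, strip predicted noise step by step."""
    alpha_bars, running = [], 1.0
    for b in betas:
        running *= 1.0 - b
        alpha_bars.append(running)

    x = [rng.gauss(0.0, 1.0) for _ in range(size)]      # pure random static
    for t in reversed(range(len(betas))):
        alpha, a_bar = 1.0 - betas[t], alpha_bars[t]
        eps = predict_noise(x, a_bar)                    # estimate of the noise in x
        coef = betas[t] / math.sqrt(1.0 - a_bar)
        x = [(xi - coef * ei) / math.sqrt(alpha) for xi, ei in zip(x, eps)]
        if t > 0:                                        # no fresh noise on the last step
            sigma = math.sqrt(betas[t])
            x = [xi + sigma * rng.gauss(0.0, 1.0) for xi in x]
    return x


# Hypothetical stand-in for a trained network: the "dataset" is one image,
# so the ideal noise estimate can be computed exactly from x and a_bar.
TARGET = [0.8, -0.2, 0.5, 0.1]


def oracle(x: list[float], a_bar: float) -> list[float]:
    return [(xi - math.sqrt(a_bar) * ci) / math.sqrt(1.0 - a_bar)
            for xi, ci in zip(x, TARGET)]


result = sample(oracle, len(TARGET), linear_betas(), random.Random(0))
print([round(v, 3) for v in result])  # [0.8, -0.2, 0.5, 0.1]
```

Any starting static lands on `TARGET` here because this toy dataset holds only one image. A real model, trained on millions of images and steered by a prompt, lands somewhere new each time.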

Ultimately, it’s these sophisticated models—whether the older GANs or newer diffusion techniques—that act as the engine, translating our simple words into complex, lifelike images. Their growing power and ease of use have opened the floodgates, putting the ability to create this content into the hands of anyone with an internet connection.

The Dark Side: Weaponizing AI for Nonconsensual Deepfakes

While the technology behind AI porn can be seen as a neutral tool, its application can be anything but. When these incredibly powerful models are turned against people without their consent, they stop being creative instruments and become weapons of digital violence. This is the grim reality of nonconsensual deepfakes, where someone’s identity is literally hijacked and grafted onto explicit content.

This isn’t some far-off, hypothetical threat. It’s happening right now, every single day. Individuals, the vast majority of whom are women, are finding their faces plastered onto pornographic videos and images they had absolutely nothing to do with. The result is a profound violation that leaves deep, lasting scars—a unique form of abuse that demolishes a person's reputation, mental health, and sense of safety online.

What makes this so insidious is how easy it has become. What used to take a Hollywood VFX team can now be done with a few clicks, turning anyone with a social media profile into a potential target. This accessibility has unleashed a crisis that's growing at an alarming rate, leaving victims feeling helpless and alone.

The Human Cost of a Digital Violation

The damage from nonconsensual deepfake porn goes far beyond a doctored photo. For the person targeted, it’s a full-blown psychological assault.

It completely shatters the line between what’s real and what’s fake, leaving them feeling powerless as a digital counterfeit of themselves is passed around the internet. This "digital doppelgänger" can torpedo relationships, wreck career prospects, and trigger waves of public shaming and harassment. The feeling of violation is immense; their face, their identity, is being used in ways they never agreed to, for an audience they never wanted.

The consequences don't just stay online, either. They bleed into every corner of a victim’s life.

- Emotional Trauma: Victims frequently report severe anxiety, depression, and even symptoms of PTSD. The public nature of the violation can create an overwhelming sense of shame and isolation.

- Reputational Damage: The professional fallout can be catastrophic. People have lost jobs over this, or found it nearly impossible to get hired. Personal relationships with friends and family are often pushed to the breaking point.

- A Constant State of Fear: Once a deepfake hits the web, it's almost impossible to erase it completely. Victims are forced to live with the constant fear that it will resurface, be found by a new boss or partner, or be used to blackmail them.

This isn't just a tech problem; it's a human rights issue. The creation and distribution of nonconsensual deepfake porn is a calculated form of gender-based violence, designed to humiliate, control, and silence its targets.

The sheer scale of this is staggering. A cybersecurity study back in 2019 found that a shocking 96% of all deepfake videos online were nonconsensual pornography, and women were the primary targets. By 2023, the crisis had exploded. One report documented a 550% increase in these videos, with an overwhelming 99% of the victims being female. The data paints a clear picture: this technology is being weaponized against women. You can explore more data on the rise of deepfake pornography and its devastating impact.

A Crisis Demanding Urgent Action

The explosion of nonconsensual AI porn is a massive social failure. It’s a harsh lesson in how fast technology can outrun our laws and ethics, leaving the most vulnerable people completely exposed. Right now, the legal landscape is a messy patchwork of old laws that were never designed to handle this kind of digital abuse.

For victims, trying to get justice is an uphill battle. It's incredibly difficult to track down who made the deepfake, and when the perpetrator is in another country, jurisdictional nightmares begin. This legal gray area has created an environment where abusers can act with almost no fear of being held accountable.

Tackling this crisis is going to take a coordinated effort. We desperately need stronger, clearer laws that explicitly criminalize the creation and sharing of nonconsensual deepfakes. At the same time, tech platforms have to step up and take more responsibility for finding and removing this content. AI developers also have a moral duty to build better safeguards into their tools.

Ultimately, this isn't something one group can solve alone. It's a societal problem that demands a collective response to protect the fundamental rights of consent and identity in the digital age.

Navigating the Legal and Ethical Minefield

The moment AI porn went from a niche tech experiment to a mainstream reality, it slammed head-on into a wall of legal and ethical questions. The technology is moving at a breakneck speed, but our laws are practically standing still. This has created a chaotic gray area where the lines between legal and illegal, right and wrong, are dangerously blurry. We're not just talking about what's allowed; we're debating the very nature of digital identity, consent, and the real-world harm that synthetic fantasies can cause.

This legal confusion is a breeding ground for exploitation. When laws are vague, it’s easy for bad actors to operate in the shadows with little fear of getting caught. For victims of nonconsensual deepfakes, seeking justice becomes a frustrating, often impossible, uphill battle. The root of the problem is that our laws were written for a physical world, leaving them completely unequipped to handle the unique challenges of digital replication and instant, borderless distribution.

The Patchwork of Unclear Laws

As it stands, there is no single federal law in the United States that directly tackles the creation of AI-generated explicit content. What we have instead is a confusing patchwork of state laws, each with its own definitions and penalties. A handful of states have passed laws aimed at nonconsensual deepfakes, but their effectiveness is severely limited.

This creates a jurisdictional nightmare. What happens if someone in a state with no deepfake law creates AI porn of a person living in a state where it is illegal? Who has the authority to prosecute? This lack of legal consistency ties the hands of law enforcement and makes decisive action nearly impossible.

Even when a law is on the books, prosecutors face some massive hurdles:

- Proving Harm: It's tough to legally demonstrate the "harm" caused by a synthetic image, especially when some courts want to see tangible, physical damages.

- Identifying the Creator: Anonymity is the currency of the internet. Tracing a deepfake back to the person who actually made it can be a monumental task for investigators.

- Freedom of Speech Arguments: There's a persistent debate over whether creating AI-generated images, even explicit ones, counts as protected speech, which seriously complicates legal challenges.

This legal vacuum sends a pretty clear message to those with malicious intent: the risks are low, and the chances of getting caught are even lower. This reality just fuels the creation and spread of harmful, nonconsensual content, leaving victims with almost nowhere to turn.

Ethical Questions Without Easy Answers

Beyond the legal mess lies an ethical quicksand that's even trickier to navigate. At the very heart of it all is consent. When an AI generates a photorealistic image of a person, that individual never agreed to have their likeness used that way. It’s a profound violation of their digital identity, essentially turning a piece of who they are into a commodity for someone else's fantasy without a shred of permission.

The normalization of this kind of nonconsensual content is deeply troubling. When anyone can create AI porn that looks exactly like a real person—a celebrity, an ex-partner, a coworker—it erodes the line between fantasy and reality. This can desensitize users to the very real harm of objectification and subtly reinforce the toxic idea that a person's image is public property, free to be manipulated for anyone’s gratification.

Then there’s the look-alike problem. Many platforms have rules against depicting real people, but what about an AI character that's almost identical to someone? This ambiguity provides plausible deniability while still enabling content that clearly targets specific individuals. It's a challenge that platforms like Luvr AI are grappling with, leading them to define clear policies around what constitutes blocked or prohibited content in an effort to protect real people.

Ultimately, the technology forces us all to ask some uncomfortable questions. Where do we draw the line between creative expression and digital exploitation? And who's responsible when these powerful tools are used to cause harm—the developer who built the AI, the platform hosting the content, or the user who clicked "generate"? Without clear legal and ethical frameworks, we risk stumbling into a digital future where consent is optional and personal identity is up for grabs.

The Escalating Threat of AI-Generated CSAM

As we explore the world of AI porn, we inevitably run into its darkest, most disturbing application: the creation of synthetic Child Sexual Abuse Material (CSAM). This isn't just a gray area. This is where the technology crosses an absolute, non-negotiable line, shifting from ethically tricky adult content into the territory of pure criminality and moral horror.

The same generative AI that creates adult fantasies can be twisted to produce photorealistic images and videos depicting the abuse of children. Let's be perfectly clear: while these images don't show real children being harmed in that moment, that distinction offers zero relief, legally or morally. The content itself is illegal, deeply damaging, and represents a grave threat we simply cannot ignore.

This synthetic CSAM throws a massive wrench into the works for law enforcement and child protection agencies. It blurs the lines between real and fake abuse, forcing investigators to waste precious time figuring out what's what. Every minute spent analyzing a synthetic image is a minute they can't spend rescuing a real child from harm. This complication gives offenders a terrifying new tool to generate this vile material on demand.

The Alarming Scale of Synthetic Abuse

This isn't some far-off problem we need to worry about in the future. The explosion of AI-generated CSAM is a full-blown crisis happening right now in the hidden corners of the internet. The speed and scale at which this material is being churned out is unlike anything we've ever seen before.

The numbers are staggering. In just one month in 2023, the Internet Watch Foundation (IWF) discovered over 20,000 AI-generated child abuse images on dark web forums. Out of those, more than 3,000 showed clear criminal acts of abuse, with thousands more being classified as illegal pseudo-photographs under UK law. This terrifying surge has finally pushed lawmakers to scramble for a response, with new legislation like the PROACTIV AI Data Act being introduced in the U.S. Senate.

A Call for Uncompromising Safeguards

The very existence of AI-generated CSAM places a huge ethical weight on the shoulders of every AI developer and platform out there. The old argument that "AI is just a neutral tool" completely falls apart when that tool is used to simulate the most depraved crimes imaginable. There's no room for debate here.

Developers have a profound moral and social duty to build powerful, ironclad safeguards into their systems to stop this from happening.

This goes way beyond just playing whack-a-mole with content after it's been created. It means getting ahead of the problem with proactive measures, including:

- Training Data Filtration: Scouring datasets to remove any and all material that could possibly be used to generate illegal content.

- Prompt-Level Blocking: Using sophisticated filters that block keywords, concepts, and image combinations tied to minors and abusive acts.

- Output Detection Systems: Building powerful classifiers that can automatically identify and flag synthetic CSAM before a user ever sees it.
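The second and third safeguards can be sketched as two gates around a generator. Everything here is hypothetical: `BLOCKED_TERMS` holds placeholder strings rather than a real list, and `classifier` stands for whatever trained image classifier a platform might use. A plain keyword match like this is the crudest possible prompt filter and is easy to evade, which is why it could only ever be one layer alongside training-data filtration and output detection.

```python
import re
import unicodedata
from typing import Callable

# Hypothetical placeholder entries; a real list would be curated by trust-and-
# safety specialists, kept private, and updated continuously.
BLOCKED_TERMS = {"blockedterm", "another blocked phrase"}


def normalize(text: str) -> str:
    """Strip accents, fold case, and turn punctuation runs into single spaces."""
    text = unicodedata.normalize("NFKD", text)
    text = "".join(ch for ch in text if not unicodedata.combining(ch))
    text = re.sub(r"[^a-z0-9]+", " ", text.casefold())
    return text.strip()


def prompt_is_blocked(prompt: str) -> bool:
    """Gate 1 (prompt level): refuse if any blocked term appears as whole words."""
    padded = f" {normalize(prompt)} "
    return any(f" {normalize(term)} " in padded for term in BLOCKED_TERMS)


def release_image(image: bytes, classifier: Callable[[bytes], float],
                  threshold: float = 0.1) -> bool:
    """Gate 2 (output level): release only if the classifier's risk score
    is below a deliberately strict threshold."""
    return classifier(image) < threshold


print(prompt_is_blocked("A photo with BLOCKEDTERM!"))        # True
print(prompt_is_blocked("a landscape at sunset"))            # False
print(release_image(b"...", classifier=lambda img: 0.95))    # False (withheld)
```

The output gate withholds anything the classifier scores at 0.1 or higher, deliberately trading some false positives for fewer misses.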

The potential for misuse is so catastrophic that a zero-tolerance policy is the only acceptable path forward. Any platform involved in generative AI must treat the prevention of CSAM as its highest and most urgent priority.

This is a responsibility that platforms like Luvr AI take incredibly seriously. A strict, enforceable underage content policy isn't just a legal checkbox; it's a fundamental pillar of operating responsibly. Anything less is a catastrophic failure to protect the most vulnerable among us and a complete abandonment of corporate duty.

Where We Go from Here: The Future of AI Content and the Global Regulatory Maze

The world of synthetic media isn't just growing—it's exploding. What we're seeing right now is just the first tremor of a massive earthquake set to reshape how we create and interact with digital content. This technology is getting smarter, cheaper, and woven into the fabric of our lives at an astonishing speed, pointing to a future where AI-generated content is as common as a selfie.

But with this incredible momentum comes a tidal wave of challenges that we're only just beginning to grapple with. The very qualities that make this tech so powerful—its speed, its global reach, and the anonymity it offers—also make it a regulator’s worst nightmare. We're stepping into an age where telling real from fake will be one of our biggest digital headaches.

The Coming Tsunami of Synthetic Media

It’s hard to wrap your head around the sheer scale of this. We're not talking about a slow, gradual increase; we're witnessing an exponential surge in the creation of synthetic media, especially deepfakes. This flood of content is already overwhelming our detection tools and legal systems.

The numbers are just staggering. A 2025 briefing from the European Parliament projected that by the end of 2025, around 8 million deepfake videos will be circulating globally. That’s a jaw-dropping 16-fold jump from the 500,000 shared in 2023. Even more concerning, experts report that nearly 98% of this material is pornographic. This volume makes manual content moderation a joke and turns automated detection into a frantic, endless game of whack-a-mole. You can read the full analysis on this dramatic surge in synthetic media and its risks for a deeper dive.
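The 16-fold figure follows directly from the two counts, and it implies a startling yearly pace. Treating 2023 to the end of 2025 as roughly two years of steady compound growth (a simplifying assumption, not something the briefing states), the volume would have to quadruple every year:

```latex
\frac{8\,000\,000}{500\,000} = 16,
\qquad
16^{1/2} = 4 \;\Rightarrow\; \text{about } 4\times \text{ growth per year}
```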

The Borderless Problem of Digital Harm

One of the thorniest issues here is that the internet couldn't care less about national borders. Someone in one country can create nonconsensual AI porn of a person living on the other side of the planet, sparking a legal and jurisdictional firestorm.

This creates a puzzle that feels almost impossible to solve:

- Whose laws even apply? Is it the creator's country? The victim's? The country where the server is hosted?

- How do you enforce anything? Even if a court issues a ruling, getting it enforced across international lines is a bureaucratic nightmare, often requiring complex treaties that don't exist.

- Anonymity is the perfect shield. Perpetrators can easily hide behind VPNs and throwaway accounts, making it a massive challenge for law enforcement to even figure out who they are.

This borderless reality means any attempt to regulate this with purely national laws is destined to fail. A patchwork of different rules just creates loopholes that bad actors are more than happy to jump through.

Lasting solutions cannot be found in isolation. The global nature of this problem demands an equally global response, built on international cooperation and harmonized legal standards. Without it, we're just building digital fences with gaping holes.

Why Tech Alone Can't Be the Answer

While new regulations like the EU's AI Act are a solid first step, they shine a spotlight on a fundamental truth: technology alone can't solve the problems that technology creates. Detection algorithms tend to lag a step behind the generation models they're trying to police, and for every new watermark or digital signature someone invents, someone else is already working on a way to break or strip it.
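A toy example shows why a signature cannot settle what is real on its own. The sketch below uses Python's standard `hmac` module with a hypothetical shared key as a simplified stand-in; real provenance schemes such as C2PA content credentials rely on public-key certificates instead. A valid signature proves a file is unchanged since signing, but a broken or missing one proves nothing about whether the content is fake, and stripping the signature is the easiest attack of all.

```python
import hashlib
import hmac
from typing import Optional

# Hypothetical shared secret for illustration only. Real provenance systems
# such as C2PA content credentials use public-key certificates instead.
SIGNING_KEY = b"demo-key-not-for-production"


def sign(content: bytes) -> str:
    """Return a hex HMAC-SHA256 tag binding the key to these exact bytes."""
    return hmac.new(SIGNING_KEY, content, hashlib.sha256).hexdigest()


def verify(content: bytes, tag: Optional[str]) -> bool:
    """True only if a tag is present and matches the content byte for byte."""
    if tag is None:                      # metadata stripped: nothing to check
        return False
    return hmac.compare_digest(sign(content), tag)


original = b"\x89PNG...pixel data..."
tag = sign(original)

print(verify(original, tag))              # True: untouched file
print(verify(original[:-1] + b"!", tag))  # False: one changed byte
print(verify(original, None))             # False: tag stripped
```

To a checker, the last two cases look the same as an ordinary unsigned photo: all of them fail, and none of those failures says anything about whether the content is synthetic.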

A real strategy has to be a multi-front war. It needs lawmakers to step up and create clear, enforceable international laws. It needs tech companies to take genuine responsibility for the power of the tools they release. And maybe most importantly, it needs a massive public education effort to build digital literacy so people understand the risks. The future of ai porn and synthetic media isn't just a technical problem—it's a deeply human one, and it will take all of us working together to navigate it safely.

Ready to explore the creative and responsible side of AI companionship? With Luvr AI, you can design unique AI characters and engage in immersive, private conversations built on a platform that prioritizes safety and user control. Discover the possibilities at https://www.luvr.ai.