
The simple truth is, your chats with an AI aren't truly private. When you're talking with a character on a platform like Character.AI or Luvr AI, the company is also part of the conversation. They collect and analyze your chats to train their models and make the service better.

Even with privacy policies in place, your interactions are accessible to the company. They just aren't confidential in the way a private message to a friend would be.

How Private Are Your Character AI Chats, Really?

Chatting with an AI can feel incredibly personal, almost like sharing secrets with a digital confidant. But what actually happens to those conversations once you hit send?

Think of it this way: your chat history is like a diary, but you're not the only one reading it. The AI platform is essentially looking over your shoulder, learning from every single word you type. This isn't necessarily malicious; it's how these systems get better.

Your interactions—the questions you ask, the stories you tell, your unique way of phrasing things—all become part of the AI's "textbook." It studies this massive collection of user data to become more realistic, engaging, and human-like. This is the fundamental trade-off: you get a more lifelike experience, but in return, you're providing the raw data to make it happen. And that's where the critical questions about character AI privacy come into play.

The Core Privacy Equation

The real issue isn't just that data is being collected, but what kind of data is collected and why. These platforms typically gather more than just your chat logs. They also track behavioral data (like which characters you talk to most and how long your sessions are) and technical details from your device.

Sure, this information helps tailor the experience to you, but it also paints a remarkably detailed picture of your personality, interests, and habits.

While this data exchange is usually spelled out in the fine print of the terms of service, the full implications can be easy to miss. For instance, your chats could be flagged for review by human moderators for safety checks or anonymized and bundled into massive datasets for research. The bottom line is that your conversations are treated as a company asset, not as a truly private communication.

Your chats with an AI aren't a two-way street. They're a three-way conversation between you, the AI, and the company that owns it. Understanding this is the first step toward protecting yourself.

To help you get a handle on this, let's quickly map out the main areas of concern. The table below gives you a snapshot of the primary risks and what you can do about them.

Quick Guide to Character AI Privacy Risks and Protections

This table summarizes the primary privacy concerns associated with Character AI platforms and the corresponding protective measures users should be aware of.

| Area of Concern | What It Means for You | Key Protective Action |
|---|---|---|
| Data Collection | Your chat logs, preferences, and device info are stored and analyzed. | Avoid sharing personally identifiable information (PII) like your real name or address. |
| Model Training | Your conversations are used to improve the AI's future responses. | Regularly review and delete chat histories from your account if the platform allows. |
| Human Review | Employees may read chats to enforce safety policies or debug issues. | Assume your conversations are not fully confidential and adjust what you share accordingly. |
| Security Risks | Your data could be exposed in the event of a security breach. | Use a unique, strong password and enable two-factor authentication (2FA) if available. |

Think of this as your starting point. Now that you know the landscape, we can dive deeper into each of these areas and give you more specific strategies to navigate the world of AI companions safely.
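As one concrete instance of the "unique, strong password" advice in the table, here is a minimal Python sketch that generates a random password with the standard library's `secrets` module. The function name `generate_password`, the 20-character default, and the 12-character minimum are illustrative assumptions, not requirements of any particular platform. In practice a password manager does the same job for you.

```python
import secrets
import string

def generate_password(length: int = 20) -> str:
    """Return a random password mixing lowercase, uppercase, and digits.

    The length default and minimum are illustrative choices, not a standard.
    """
    if length < 12:
        raise ValueError("use at least 12 characters")
    alphabet = string.ascii_letters + string.digits + string.punctuation
    while True:
        # secrets.choice draws from a cryptographically secure random source.
        candidate = "".join(secrets.choice(alphabet) for _ in range(length))
        # Retry until the candidate contains all three character classes.
        if (any(c.islower() for c in candidate)
                and any(c.isupper() for c in candidate)
                and any(c.isdigit() for c in candidate)):
            return candidate

password = generate_password()
print(len(password))
```

Generate a separate password for each service, so that a breach at one platform does not expose your accounts elsewhere.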

How Character AI Uses Your Conversations to Learn

When you're chatting with an AI character, it can feel like a completely private, one-on-one conversation. But behind the curtain, your words are doing more than just driving that specific exchange—they’re actively training the AI itself.

Think of the AI as a student and your conversations as its personal textbook. Every message you type, every question you pose, and every scenario you dream up becomes another lesson for it to learn from. This isn't just a side effect; it's the core process that allows these platforms to function and get better.

This constant "learning" is how an AI develops a richer personality, picks up on subtle human nuances, and fine-tunes its ability to give responses that feel real. For platforms like Luvr AI, the main objective is to craft an experience so immersive it feels seamless, and your data is the raw material that makes it all possible.

The Data That Feeds the AI

To really get a handle on character AI privacy, you need to know what kind of information is actually being collected. It's not just the text of your chats. These platforms are usually gathering several layers of data to paint a full picture of your interactions.

This collection is surprisingly thorough, designed to capture the entire context of how you use the service. From the company's point of view, this information is critical for personalizing your experience and training their language models to be more effective and engaging for everyone.

Here’s a look at the typical data points these platforms are collecting:

- Chat Logs and History: This is the most obvious one. The complete transcript of your conversations is stored and analyzed, including every single thing you say and how the AI responds.

- Behavioral Data: This is all about how you use the platform. It tracks which characters you talk to, how long and how often you chat, and which features you tend to use the most.

- User Preferences: Any personal touches you add—like creating a custom character or tweaking personality traits—are recorded. This gives the platform direct insight into what users find most interesting.

- Device and Technical Information: This includes technical data like your IP address, browser type, operating system, and unique device identifiers. It’s mostly used for security, analytics, and making sure the service works smoothly on different devices.

Why Is This Data Collection Necessary?

From the platform's perspective, gathering all this data isn't optional—it's essential for their survival and growth. They’d argue it’s the only way to provide the kind of sophisticated, personalized interactions that users expect. Without it, the AI would feel flat, generic, and stuck in time.

This trade-off—your data for a better service—has fueled incredible growth in the AI companion industry. By early 2025, Character.AI had already attracted 20 million active users worldwide, establishing itself as a dominant force. While this shows just how many people are embracing AI companionship, it also amplifies the privacy concerns. To work, these systems chew through enormous amounts of personal data, and if that data isn't handled carefully, it creates real risks.

Every interaction you have is a lesson for the AI. The data helps it learn what works, what doesn't, and how to become a more believable companion for you and everyone else on the platform.

Your seemingly private chats are, in fact, valuable assets used to train powerful models, which makes clear and honest data policies an absolute must. You can dive deeper into the Character.AI statistics and growth on Business of Apps.

It’s this continuous feedback loop that allows the AI to evolve. For example, if a certain style of humor gets positive reactions from many users, the model learns to use it more often. On the flip side, if a response consistently comes across as confusing or creepy, the system learns to avoid similar phrasing down the road.

Ultimately, this entire process boils down to the central compromise of using AI character platforms. You get an increasingly realistic and customized experience, but the price of admission is your conversational data. Grasping this exchange is the first step toward making smarter choices about your privacy online.


The Hidden Risks of Sharing Personal Data with AI

It’s one thing to know that AI platforms use your chats to train their models. That’s the basic trade-off. But the real risks to your character AI privacy run much deeper than just helping an algorithm get smarter. Think of it like this: your data is locked away in a digital vault. Even the most secure vaults have potential weak points, and it's smart to know exactly what they are.

When you open up to an AI—sharing personal stories, secrets, or even just creative fantasies—that information gets added to a colossal dataset. The most obvious and immediate threat here is a data breach. No company, not even a giant like Luvr AI, is completely safe from hackers. If their systems are ever compromised, your most private conversations could suddenly be all over the internet.

This isn't like losing a credit card number you can just cancel. The exposure of intimate conversations can cause real, lasting harm—from emotional distress and damage to your reputation all the way to blackmail. Details you shared thinking they were private could become public knowledge in an instant.

The Myth of "Anonymous" Data

Platforms love to say they "anonymize" the data they use for training, which is supposed to mean they strip out all your personal details. The problem is, truly effective anonymization is incredibly difficult to pull off. Time and again, researchers have proven it's possible to re-identify people from so-called anonymous data just by connecting the dots with other public information.

Let's say over several chats, you mention your hometown, your job, and a unique hobby. Each tidbit seems harmless alone. But for someone determined, those three pieces of information could be enough to pinpoint exactly who you are. This process, known as de-anonymization, can turn what you thought were innocent chat logs into a serious privacy vulnerability.

The promise of "anonymized data" often creates a false sense of security. Your unique way of talking and the specific details you share can act like a digital fingerprint, making you far more identifiable than you realize.

This is a huge deal, especially since privacy is a top concern for anyone using these character AIs. The platforms need your personal details to give you personalized, realistic responses. But that very practice creates a risk if the data isn't handled perfectly. It's a tricky balancing act, and even with today's technology, the trade-offs between a great experience and solid character AI privacy are very real. Experts consistently advise caution, a point you can see explored in more detail in this breakdown of character AI's complex privacy pros and cons on Digital Defynd.
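The hometown, job, and hobby example above can be made concrete with a small Python sketch. The records are entirely made up, and the helper `count_matches` is a hypothetical name. The point is only to show how each extra detail shrinks the pool of people a record could belong to, even when no names are stored.

```python
# Hypothetical "anonymized" records: names removed, everyday details kept.
records = [
    {"hometown": "Austin", "job": "nurse", "hobby": "chess"},
    {"hometown": "Austin", "job": "nurse", "hobby": "hiking"},
    {"hometown": "Austin", "job": "teacher", "hobby": "chess"},
    {"hometown": "Boise", "job": "nurse", "hobby": "chess"},
    {"hometown": "Austin", "job": "nurse", "hobby": "falconry"},
    {"hometown": "Austin", "job": "teacher", "hobby": "hiking"},
]

def count_matches(rows, known):
    """Count the rows consistent with everything an observer already knows."""
    return sum(all(row.get(k) == v for k, v in known.items()) for row in rows)

print(count_matches(records, {"hometown": "Austin"}))                  # 5
print(count_matches(records, {"hometown": "Austin", "job": "nurse"}))  # 3
print(count_matches(records, {"hometown": "Austin", "job": "nurse",
                              "hobby": "falconry"}))                   # 1
```

Once the count reaches 1, the record points to a single person, and anyone who knows those three facts about you can read the rest of that record as yours.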

Your Data Develops a Life of Its Own

Another risk we don't often think about is how our data gets used for things we never agreed to. A platform’s main goal might be training its own AI, but your chat history is an incredibly valuable asset that can be used in other ways.

What kind of other ways?

- Hyper-Targeted Ads: Details from your chats—your hopes, fears, and interests—can be used to build a scarily accurate advertising profile. Suddenly, you're seeing ads that seem to know exactly what you've been thinking.

- Selling Data to Others: While most privacy policies claim they won't do this, the temptation is always there. "Anonymized" (or poorly anonymized) chat data could be sold to data brokers or market research firms.

- Training Other AIs: Your heartfelt conversations about relationships could end up being used to train a completely unrelated customer service bot or an AI model with a totally different set of ethical rules.

The moment you hit send, you lose a degree of control. Your words can be copied, sold, and repurposed in ways you never imagined.

The Limits of Encryption

To keep your data safe, platforms use encryption. This essentially scrambles your messages so that only authorized parties can read them. They do this both "in transit" (as data travels from your phone to their servers) and "at rest" (while it's stored). This is just standard-issue security for any online service.

But encryption isn't a magic shield. The company itself holds the keys to unlock your data. They have to—it's how they analyze your chats for AI training and how human moderators can review conversations for safety issues. So, while encryption is great at protecting you from outside hackers, it offers zero protection from the company itself. To them, your data is an open book, forcing you into a chain of trust you simply have to accept.

Understanding Your Data Privacy Rights

Knowing the risks of sharing your data is one thing. But to truly be in the driver's seat of your character AI privacy, you need to know your rights. It’s easy to get lost in the alphabet soup of regulations like Europe’s GDPR and California’s CCPA, but these laws give you real power.

Let's cut through the legalese. Think of these rights not as complicated legal theories, but as a set of tools you can use to manage your digital life on platforms like Luvr AI.

Your Core Data Rights Explained

When a company like an AI platform collects your information, you aren't just handing it over for good. You're loaning it to them. And just like with anything you lend out, you have the right to ask for it back, check on its condition, or ask them to fix something that's wrong.

Here are the key rights you should know about:

- The Right to Access: You can demand a full copy of every piece of data a company has on you. On a character AI platform, this means everything from your chat logs and account preferences to your activity patterns.

- The Right to Rectification: Find a mistake in your data? You have the right to tell the company to correct it. Simple as that.

- The Right to Erasure (The "Right to be Forgotten"): This is a big one. It gives you the power to ask a company to wipe your personal data from their servers, permanently, though exceptions apply, for example when the company is legally required to keep certain records.

- The Right to Restrict Processing: This lets you tell a company to stop using your data for certain things, even if they still hold onto it.

- The Right to Data Portability: You can request your data in a clean, organized, and machine-readable file. This makes it easy to take your information and move it to a different service if you want to.

These rights completely change the game. You're no longer just a passive user whose data is collected. You become an active manager of your own information, with the authority to decide how it’s used.

The image below, from the official GDPR information portal, gives a great visual of how these protections are designed to work together.

At its core, this framework demands that your data be handled lawfully, fairly, and transparently. It’s all about holding companies accountable. For a specific look at how this plays out, you can always check out the Luvr AI Privacy Policy to see their commitments.

The Spotlight on AI and Minors

Regulators are keeping a very close eye on AI platforms, especially when it comes to younger users. The responsibility to protect minors is no longer just a "nice-to-have"—it's a strict legal necessity.

We saw this firsthand in December 2024, when the Texas Attorney General launched an investigation into Character.AI and other services. This inquiry, driven by new laws like the SCOPE Act, is digging into whether these platforms are meeting their obligations for protecting children's privacy, which includes getting clear parental consent. This move sends a clear message: governments are getting serious about enforcement as AI becomes more woven into the lives of teens. You can get more details on the investigation into AI platforms and children's privacy on Lewis Silkin's site.

This rising regulatory heat is pushing AI companies to be more transparent and act more responsibly. Knowing that these legal protections exist gives you the confidence to exercise your rights and hold these platforms to their word.

Practical Steps to Safeguard Your AI Privacy

Alright, knowing the risks is one thing, but now it's time to roll up our sleeves and do something about it. Protecting your character AI privacy isn't about ditching AI companions altogether. It's about being smart and taking back control of your digital life.

Think of it like this: you wouldn't leave your front door unlocked, would you? The same principle applies here. We're going to proactively install a better lock on your digital front door instead of just hoping for the best.

Adjust Your Privacy Settings Immediately

Let's be real—most AI platforms, and that includes apps like Luvr AI, don't set up their default settings with your best interests at heart. They're usually configured for maximum data collection, not maximum privacy. It’s up to you to dive in and flip those switches.

First things first, find the "Privacy" or "Data Settings" section in your account menu. You're hunting for options that control your chat history, how your data is used for training the AI, and personalization. The mission is to clamp down on how much of your activity gets stored and analyzed.

- Turn Off Chat History: This is a big one. If the platform gives you the option to stop saving your chat logs, do it. It’s the single best way to keep your conversations from being used for AI training or getting scooped up in a data breach.

- Opt Out of Model Training: Many services let you pull your data from the pool used to train their AI models. If you see this option, always take it.

- Limit Personalization: Sure, it might make the AI feel a little less like it "knows" you, but turning off heavy personalization can significantly cut down on the behavioral data the platform gathers.

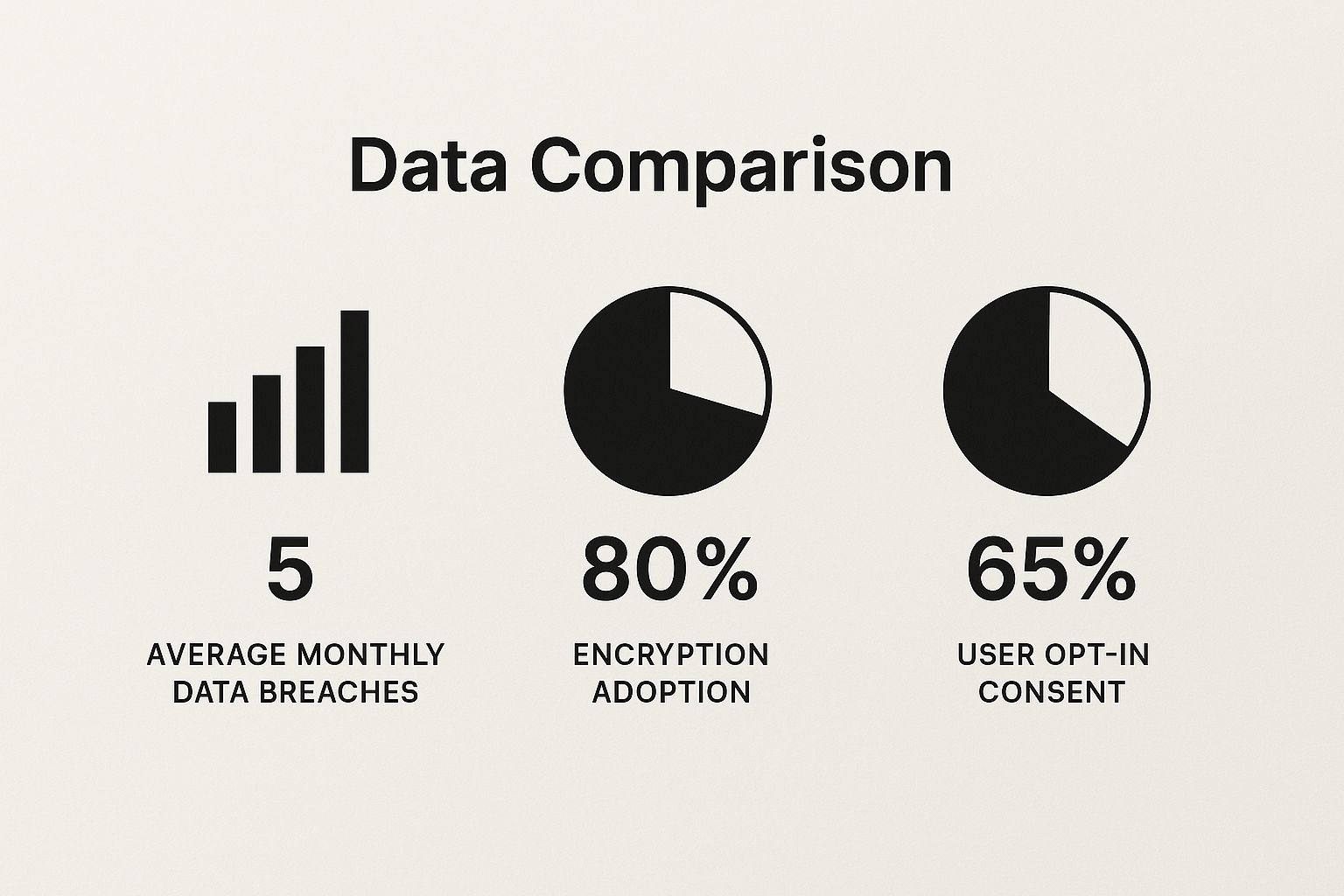

This infographic paints a pretty clear picture of why taking these steps is so important across the industry.

You can see the gap between standard security practices and how often users are actually asked for consent. That's a gap you need to close yourself by actively managing your settings.

Default vs. Recommended Privacy Settings

The out-of-the-box settings are rarely the best ones for your privacy. To help you see what needs changing, here’s a quick comparison of what you typically find versus what you should aim for.

| Setting | Typical Default Status | Recommended Action |
|---|---|---|
| Chat History Storage | Enabled by default | Disable. This is your first line of defense, preventing long-term storage. |
| Data for AI Training | Enabled by default (you are opted in automatically) | Opt out. Training on your chats should require your explicit permission, not assume it. |
| Personalized Ads | Often enabled | Disable. Stop your chats from being used to sell you things. |
| Account Visibility | Public or semi-public | Set to Private. Lock down your profile so strangers can't see your activity. |

Making these adjustments is a huge step toward a more secure experience.

Be Mindful of What You Share

This is the golden rule of character AI privacy, and it comes down to a simple change in mindset. Treat every single conversation with an AI as if it could end up on a public billboard one day.

Never, ever share personally identifiable information (PII). I'm talking about your full name, home address, phone number, email, where you work, or any financial details. A legitimate AI companion simply doesn't need to know these things.

Even tiny, seemingly innocent details can be stitched together like a quilt to figure out who you are. Steer clear of mentioning specific local spots, unique job titles, or deeply personal stories that could be traced back to you.
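To make the "never share PII" habit a little easier to keep, here is a rough Python sketch of a check you could run on a message before sending it. The patterns and the `redact` helper are illustrative assumptions. They catch only obvious email addresses and US-style phone numbers, and will miss names, addresses, and anything phrased unusually, so treat this as a safety net rather than real PII detection.

```python
import re

# Deliberately simple, hypothetical patterns; real PII detection needs far more care.
PII_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "phone": re.compile(r"(?<!\d)\d{3}[\s.-]?\d{3}[\s.-]?\d{4}(?!\d)"),
}

def redact(message: str) -> str:
    """Replace anything matching a known pattern with a labeled placeholder."""
    for label, pattern in PII_PATTERNS.items():
        message = pattern.sub(f"[{label} removed]", message)
    return message

draft = "Email me at jane.doe@example.com or call 555-123-4567 tonight."
print(redact(draft))
# Email me at [email removed] or call [phone removed] tonight.
```

A filter like this only handles the easy cases. The harder problem, small details that add up to an identity, still depends on your own judgment about what you type.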

Finally, always remember who you're really talking to: a program governed by a company's policies. The friendly AI character isn't the one making the rules about your data. To get a real sense of these rules, it pays to check out the official documents. For instance, reading the Luvr AI Terms of Service gives you a good example of what these policies actually contain. By taking these hands-on steps, you can build a much safer relationship with AI technology.

What's Next for Privacy and AI Companions?

The world of character AI privacy isn't sitting still. As these AI companions weave themselves more deeply into our daily routines, both the technology and the rules that govern it are changing fast. We're seeing a constant tug-of-war between the push for more immersive experiences and the pull for stronger personal protections.

One of the most exciting developments on the horizon is on-device processing. Imagine your AI companion thinking locally on your phone or computer, instead of sending your private chats across the internet to a company server. This simple shift keeps your raw data in your hands, allowing the AI to learn your preferences without ever needing to share your confidential conversations.

New Tech to Protect Your Data

Another game-changer is federated learning. It's a clever method that lets a central AI model get smarter by learning from thousands of users without ever seeing their actual data. Instead of uploading your conversations, your device processes them locally and sends only tiny, anonymous learning updates back to the main system.

Think of it like a group of chefs all trying to perfect a single recipe. Each chef experiments in their own private kitchen (your device), then sends anonymous notes to the head chef (the central AI) about what worked. The head chef combines these notes to improve the master recipe for everyone, but never sees what's happening inside any individual kitchen.

Technologies like these could completely reshape the privacy conversation. They open the door to having a highly personalized AI friend that doesn't need a key to your digital diary.
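The federated learning idea described above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not how any specific platform works: the "model" is a single number `weight` in `y = weight * x`, each entry in `clients` stands in for one device's private data, and the function names `local_update` and `federated_round` are made up for this example. The server only ever receives each device's updated weight, never the underlying data points.

```python
def local_update(weight, data, lr=0.1, steps=5):
    """Run a few gradient steps on one device's private (x, y) pairs."""
    for _ in range(steps):
        # Gradient of mean squared error for the model y = weight * x.
        grad = sum(2 * (weight * x - y) * x for x, y in data) / len(data)
        weight -= lr * grad
    return weight

def federated_round(global_weight, clients):
    """Each client trains locally; the server sees only the resulting weights."""
    local_weights = [local_update(global_weight, data) for data in clients]
    sizes = [len(data) for data in clients]
    # Average the local weights, weighted by how much data each client holds.
    return sum(w * n for w, n in zip(local_weights, sizes)) / sum(sizes)

# Hypothetical private datasets; every point follows y = 2 * x.
clients = [
    [(1.0, 2.0), (2.0, 4.0)],
    [(1.5, 3.0)],
    [(0.5, 1.0), (1.0, 2.0), (2.0, 4.0)],
]

weight = 0.0
for _ in range(20):
    weight = federated_round(weight, clients)
print(round(weight, 4))  # 2.0
```

One caveat worth keeping in mind: model updates can still leak information about the data behind them, which is why real deployments usually pair federated learning with extra protections such as secure aggregation or differential privacy.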

Shifting Rules and Responsibilities

Regulators are finally catching on that AI isn't just some tech-world fad. As public awareness grows, we can expect more laws aimed squarely at conversational AI. These new rules will likely demand stricter consent from users, force companies to be transparent about how our data is used for training, and draw clear lines of accountability when things go wrong.

The industry is at a pivotal moment, too. Companies like Luvr AI are constantly pushing the boundaries of what's possible, and they know that keeping user trust is just as important as building better tech. Checking out the current Luvr AI features can give you a good idea of how platforms are trying to balance that immersive experience with user control.

Navigating this new territory comes down to three core principles:

- Be Informed: Know what you're giving up for the experience. Understand the privacy trade-offs.

- Be Cautious: Treat your AI chats as if they could be read by someone else. Don't share your deepest secrets or financial details.

- Be Proactive: Dive into the privacy settings. Don't be afraid to exercise your rights to access or delete your data.

By staying engaged and aware, you can explore the fascinating world of AI companionship without losing control of your personal information. The future is personal, and your privacy is definitely worth protecting.

Frequently Asked Questions About Character AI Privacy

It's natural to have a few lingering questions after digging into the world of AI companions, their risks, and your rights. When it comes to character AI privacy, getting straight answers is the only way to feel confident about the choices you make. Let's clear up some of the most common concerns.

Can Character AI Employees Read My Chats?

The simple answer is yes, but it's not a free-for-all. Your chats aren't being live-streamed to a room full of employees. Platforms like Luvr AI do give authorized staff the ability to review conversations, but only under very specific conditions.

Think of it less like an open diary and more like a safety deposit box that a bank manager can open with a second key in an emergency. This access is usually reserved for:

- Safety and Moderation: If a chat gets flagged for breaking the rules (like discussions about illegal acts or self-harm), a human moderator might need to step in, read the exchange, and decide what to do next.

- Debugging and Quality Assurance: Sometimes the AI just acts weird or breaks. When that happens, an engineer might need to look at the exact chat that caused the glitch to figure out how to fix the system.

- Responding to Your Reports: If you flag a problem with an AI's behavior or a conversation, the support team will almost certainly need to read the chat history to understand what went wrong and help you out.

So, while your private chats aren't public knowledge, they aren't totally shielded from the company that runs the service.

Your conversations with an AI are private from the public, but not necessarily from the platform provider. It's crucial to operate under the assumption that your chats could be reviewed for legitimate operational reasons.

Does Deleting a Chat Permanently Remove It?

When you hit that "delete" button, the conversation disappears from your screen. Poof. But what's happening behind the curtain on the company's servers is a whole different ballgame. Deleting a chat from your view rarely means it's been instantly wiped from existence.

It’s a bit like putting a sensitive file through your office shredder. The paper is in pieces, but a determined person could still tape it back together. For AI platforms, your "deleted" data often sits in backups for a while, just in case of a server crash or for legal reasons. While your "right to erasure" is a powerful tool that lets you formally ask for permanent deletion, it's a separate step you have to take, and even then, it might not happen overnight.

Is It Safer to Use Character AI Without an Account?

Jumping into a character AI platform as a guest might feel like a savvy privacy hack, but it’s a double-edged sword. On one hand, you're not tying your chats directly to your personal email or identity, which keeps your sessions more isolated and anonymous.

On the other hand, registered users usually get far more control. An account is often your ticket to accessing privacy dashboards, reviewing your full chat history, and, most importantly, formally requesting that your data be deleted. These are features guests typically can't use.

The best strategy? Get the best of both worlds. Sign up using an anonymous email address that isn't tied to your real name. This gives you most of the anonymity of a guest along with the full control of a registered user, though technical data such as your IP address is still collected either way.
